IBM Platform HPC 4.1.1: Creating a network bridge on compute nodes

Applies to

- IBM Platform HPC V4.1.1

- IBM Platform Cluster Manager V4.1.1

Introduction

IBM Platform HPC provides the ability to customise the network configuration of compute nodes via Network Profiles. Network Profiles support a custom NIC script for each defined interface.

Custom NIC scripts make it possible to configure network bonding and bridging. Here we provide a detailed example of how to configure a network bridge in a cluster managed by IBM Platform HPC.

IBM Platform HPC includes xCAT technology for cluster provisioning. xCAT includes a script (/install/postscripts/xHRM) which may be used to configure network bridging. This script is leveraged as a custom network script in the example below.

Example

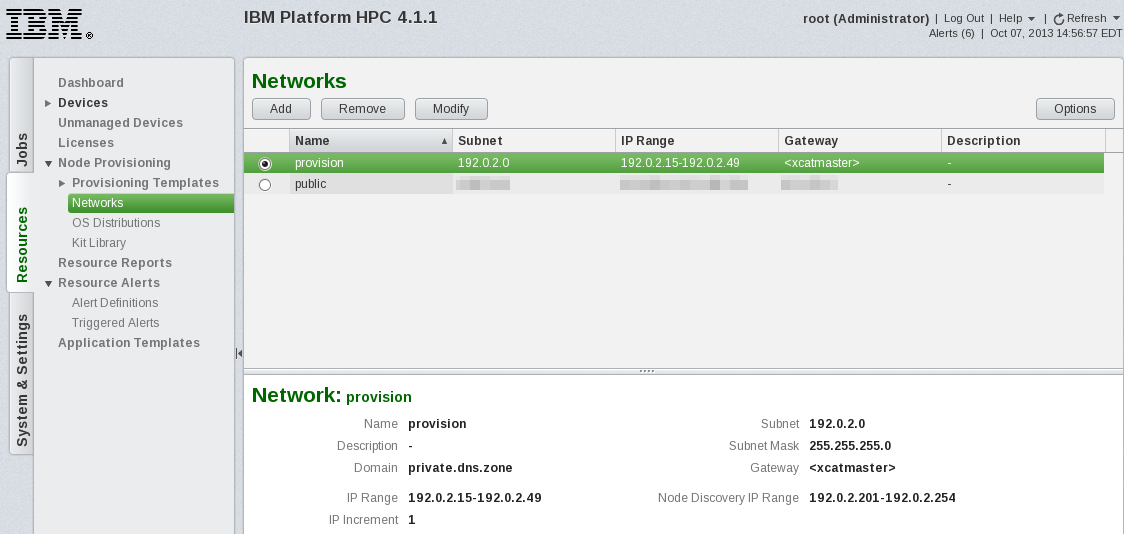

The configuration of the provision network may be viewed in the IBM Platform HPC Web console at: Resources > Node Provisioning > Networks.

The configuration of the provision network may also be viewed using the lsdef CLI.

# lsdef -t network provision

Object name: provision

domain=private.dns.zone

dynamicrange=192.0.2.201-192.0.2.254

gateway=<xcatmaster>

mask=255.255.255.0

mgtifname=eth0

net=192.0.2.0

staticrange=192.0.2.15-192.0.2.49

staticrangeincrement=1

tftpserver=192.0.2.50

The Network Profile default_network_profile, which includes the provision network, may be viewed in the IBM Platform HPC Web console at: Resources > Node Provisioning > Provisioning Templates > Network Profiles.

The Network Profile default_network_profile configuration may also be viewed using the lsdef CLI.

# lsdef -t group __NetworkProfile_default_network_profile

Object name: __NetworkProfile_default_network_profile

grouptype=static

installnic=eth0

members=

netboot=xnba

nichostnamesuffixes.eth0=-eth0

nichostnamesuffixes.bmc=-bmc

nicnetworks.eth0=provision

nicnetworks.bmc=provision

nictypes.eth0=Ethernet

nictypes.bmc=BMC

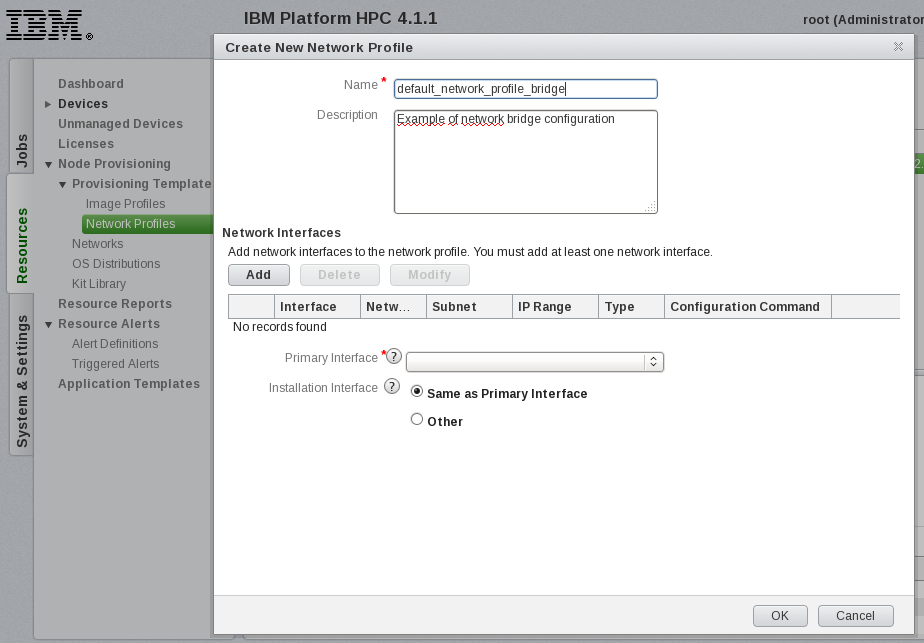

primarynic=eth0

Here, we configure a network bridge br0 against eth0 for compute nodes using a new Network Profile.
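The lsdef listings above are plain attribute=value text, so a single attribute can be pulled out in a script, for example to confirm which network eth0 is attached to before creating the bridged profile. The following is a minimal sketch; lsdef_attr is a hypothetical helper name, not part of xCAT or IBM Platform HPC, and it assumes the output format shown above.

```shell
# Print the value of one attribute from `lsdef` output read on stdin.
# lsdef_attr is a hypothetical helper, not an xCAT command.
lsdef_attr() {
    # $1 is the attribute name, e.g. nicnetworks.eth0
    awk -v key="$1" '
        {
            sub(/^[ \t]+/, "")              # drop leading indentation
            pos = index($0, "=")            # split on the first "=" only
            if (pos > 0 && substr($0, 1, pos - 1) == key) {
                print substr($0, pos + 1)
                exit
            }
        }
    '
}
```

On the management node it could be used as `lsdef -t group __NetworkProfile_default_network_profile | lsdef_attr nicnetworks.eth0`, which for the listing above would print provision.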

- Add a new Network Profile with name default_network_profile_bridge via the IBM Platform HPC Web console. As an Administrator user, browse to Resources > Node Provisioning > Provisioning Templates > Network Profiles and select the button Add.

A total of three devices are required to be added:

- eth0
  - Type: Ethernet
  - Network: provision
- bmc
  - Type: BMC
  - Network: provision
- br0
  - Type: Customized
  - Network: provision
  - Configuration Command: xHRM bridgeprereq eth0:br0 (creates network bridge br0 against eth0)

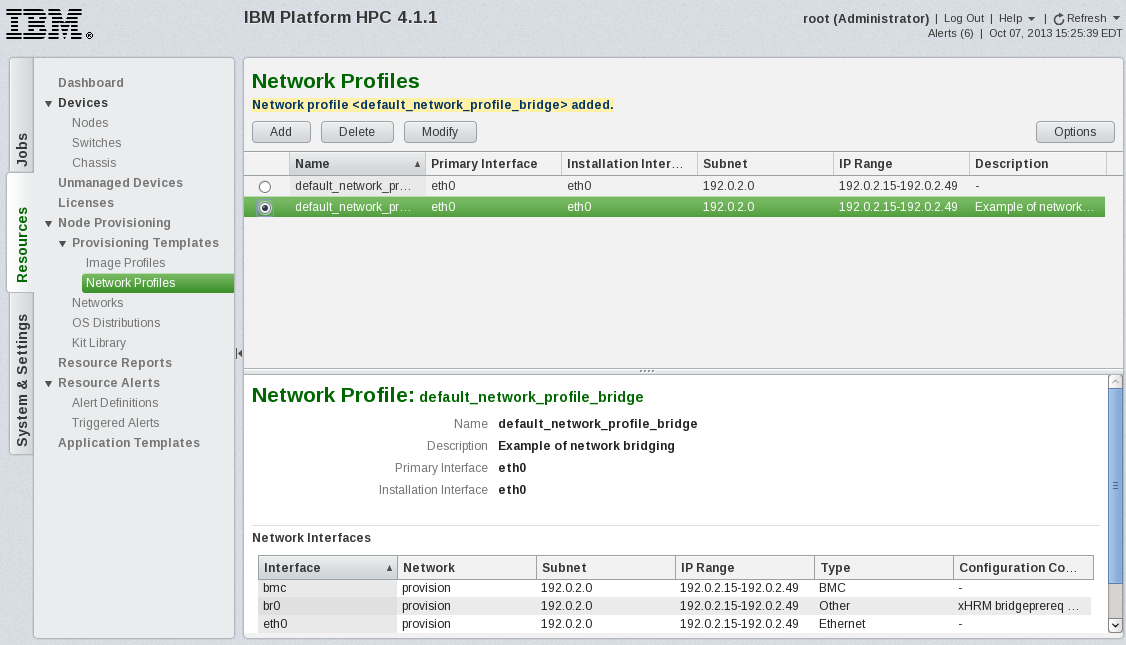

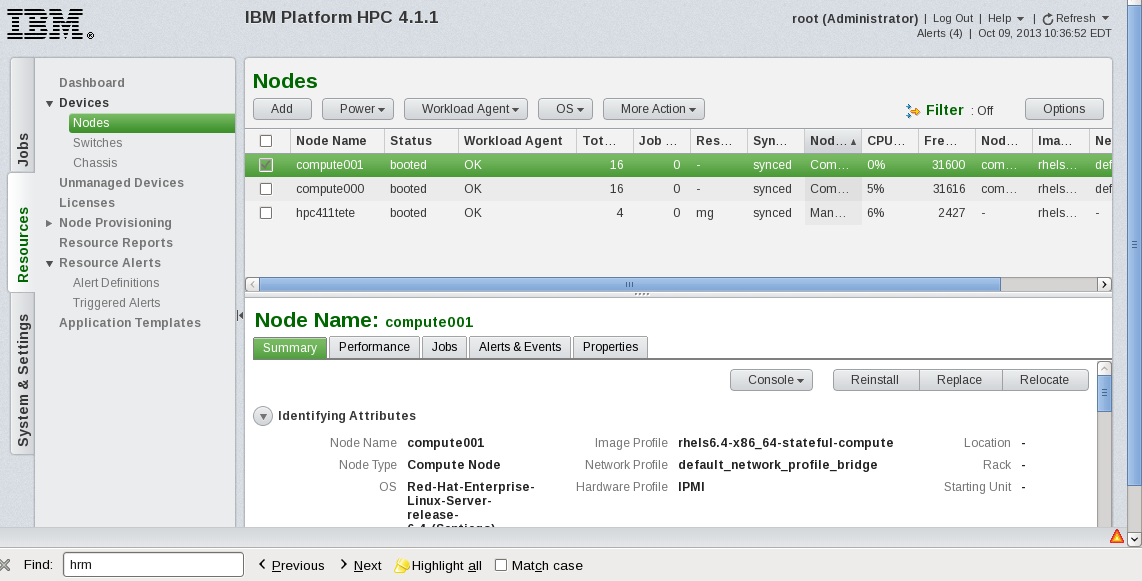

The new Network Profile default_network_profile_bridge is shown below.

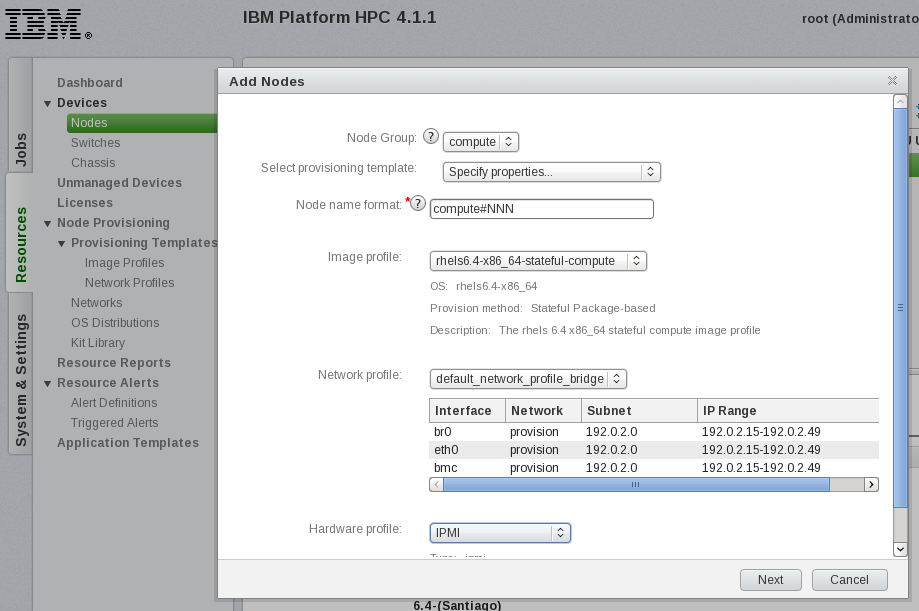
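To make concrete what a bridge br0 "against" eth0 means on a node, the sketch below shows the conventional pair of RHEL-style interface files for such a bridge. It is an illustration under stated assumptions, not output captured from xHRM: it assumes a RHEL-family compute node that uses /etc/sysconfig/network-scripts, and it borrows the address 192.0.2.20 from the static range of the provision network. The files that xHRM actually writes may differ in detail.

```shell
# Illustrative only: assumed RHEL-style ifcfg contents, not captured from a node.

# /etc/sysconfig/network-scripts/ifcfg-br0 (the bridge carries the IP address)
DEVICE=br0
TYPE=Bridge
ONBOOT=yes
BOOTPROTO=none
IPADDR=192.0.2.20
NETMASK=255.255.255.0

# /etc/sysconfig/network-scripts/ifcfg-eth0 (the physical NIC becomes a bridge port)
DEVICE=eth0
ONBOOT=yes
BRIDGE=br0
```

The point of the layout is that the IP configuration moves from eth0 to br0, while eth0 only names the bridge it belongs to.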

- Now we are ready to provision the nodes using the new Network Profile default_network_profile_bridge. To begin adding nodes, navigate in the IBM Platform HPC Web console to Resources > Devices > Nodes and select the button Add. Within the Add Nodes window, optionally select Node Group compute, and select Specify Properties for the provisioning template. This will allow you to select the newly created network profile default_network_profile_bridge. Here the hardware profile IPMI and stateful provisioning are used.

Nodes are added using Auto discovery by PXE boot. Nodes may also be added using a node information file.

The nodes are powered on, detected by IBM Platform HPC and provisioned. In this example, two nodes, compute000 and compute001, are detected and subsequently provisioned.

- Once the nodes have been provisioned and complete their initial boot, they appear in the IBM Platform HPC Web console (Resources > Devices > Nodes) with Status booted and Workload Agent OK.

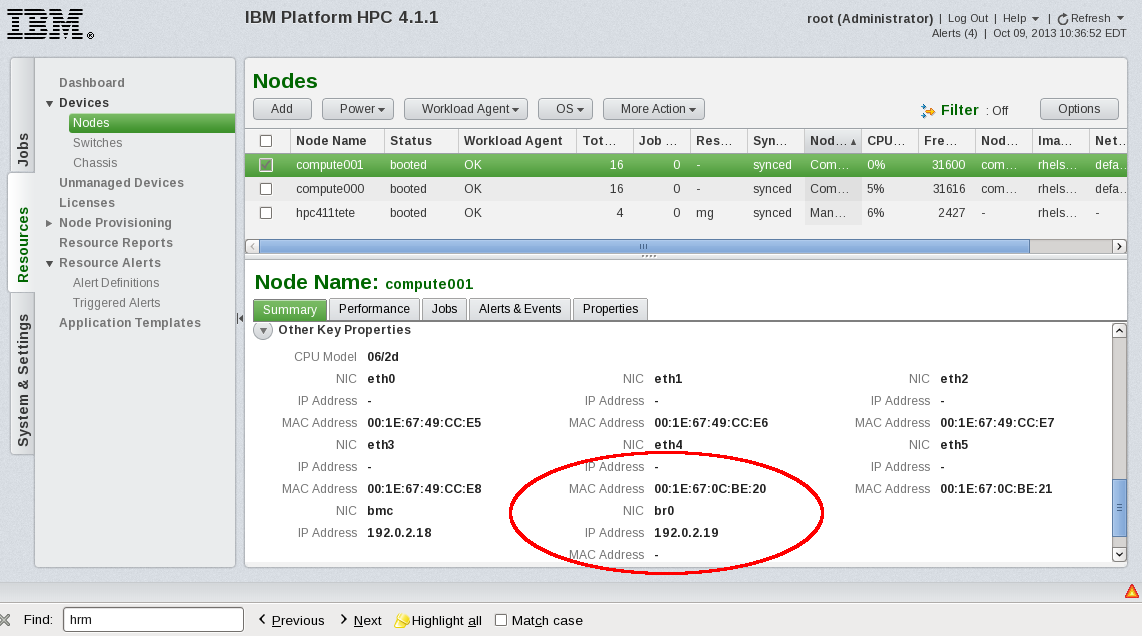

The network bridge is configured on the nodes as expected. We may see this in the IBM Platform HPC Web console by browsing to Resources > Devices > Nodes, selecting the Summary tab, and scrolling to Other Key Properties.

Finally, using the CLI xdsh, we remotely execute ifconfig on node compute001 to check the configuration of interface br0.

# xdsh compute001 ifconfig br0

compute001: br0 Link encap:Ethernet HWaddr 00:1E:67:49:CC:E5

compute001: inet addr:192.0.2.20 Bcast:192.0.2.255 Mask:255.255.255.0

compute001: inet6 addr: fe80::b03b:7cff:fe61:c1d4/64 Scope:Link

compute001: UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

compute001: RX packets:26273 errors:0 dropped:0 overruns:0 frame:0

compute001: TX packets:42490 errors:0 dropped:0 overruns:0 carrier:0

compute001: collisions:0 txqueuelen:0

compute001: RX bytes:11947435 (11.3 MiB) TX bytes:7827365 (7.4 MiB)

compute001:

As expected, the compute nodes have been provisioned with a network bridge br0 configured.
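When more than one node is checked, the xdsh output can be reduced to one line per node. The following is a minimal sketch that assumes the older net-tools ifconfig format shown above (an "inet addr:" field); bridge_ipv4 is a hypothetical helper name, not part of xCAT.

```shell
# Read `xdsh <noderange> ifconfig br0` output on stdin and print
# "<node> <IPv4 address>" for each node that reports an address.
# bridge_ipv4 is a hypothetical helper, not an xCAT command.
bridge_ipv4() {
    awk '
        /inet addr:/ {                      # matches the IPv4 line, not "inet6 addr:"
            node = $1
            sub(/:$/, "", node)             # "compute001:" -> "compute001"
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^addr:/) {
                    ip = $i
                    sub(/^addr:/, "", ip)   # "addr:192.0.2.20" -> "192.0.2.20"
                    print node, ip
                    break
                }
            }
        }
    '
}
```

For the two nodes in this example it could be run as `xdsh compute000,compute001 ifconfig br0 | bridge_ipv4`. A node that prints no line either has no br0 interface or has no IPv4 address assigned to it.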