IBM Platform HPC V4.1.1.1 - Best Practices for Managing NVIDIA GPU Devices

Summary

IBM Platform HPC V4.1.1.1 is easy-to-use yet comprehensive technical computing infrastructure management software. It provides standard GPU management capabilities, such as monitoring and workload scheduling, for systems equipped with NVIDIA GPU devices.

At the time of writing, the latest available NVIDIA CUDA version is 5.5. This document provides a series of best practices for deploying and managing an IBM Platform HPC cluster with servers equipped with NVIDIA GPU devices.

Introduction

This document serves as a guide to enabling the GPU management capabilities of IBM Platform HPC V4.1.1.1 in a cluster equipped with NVIDIA Tesla Kepler GPUs and NVIDIA CUDA 5.5. The steps below assume familiarity with IBM Platform HPC V4.1.1.1 commands and concepts. As part of the procedure, an NVIDIA CUDA 5.5 Kit is prepared using an example template.

The example cluster defined in the Best Practices below is equipped as follows:

- Red Hat Enterprise Linux 6.4 (x86-64)

- IBM Platform HPC management node (hpc4111tete)

- Compute nodes:

- compute000 (NVIDIA Tesla K20c)

- compute001 (NVIDIA Tesla K40c)

- compute002 (NVIDIA Tesla K40c)

A. Create an NVIDIA CUDA Kit

It is assumed that the IBM Platform HPC V4.1.1.1 management node (hpc4111tete) has been installed and that there are three compute nodes equipped with NVIDIA Tesla GPUs, which will be provisioned as part of the procedure.

Here we provide the procedure for downloading the NVIDIA CUDA RPMs from NVIDIA. This is done by first installing the NVIDIA CUDA repository RPM, which configures the CUDA yum repository from which the NVIDIA CUDA RPMs are then downloaded for packaging as a Kit.

Additional details regarding IBM Platform HPC Kits can be found here.

The procedure assumes the following:

- The IBM Platform HPC management node has access to the Internet.

- The procedure has been validated with NVIDIA CUDA 5.5.

- All commands are run as user root on the IBM Platform HPC management node, unless otherwise indicated.

- Install the yum downloadonly plugin on the IBM Platform HPC management node.

# yum install yum-plugin-downloadonly

Loaded plugins: product-id, refresh-packagekit, security, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package yum-plugin-downloadonly.noarch 0:1.1.30-14.el6 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

yum-plugin-downloadonly noarch 1.1.30-14.el6 xCAT-rhels6.4-path0 20 k

Transaction Summary

================================================================================

Install 1 Package(s)

Total download size: 20 k

Installed size: 21 k

Is this ok [y/N]: y

Downloading Packages:

yum-plugin-downloadonly-1.1.30-14.el6.noarch.rpm | 20 kB 00:00

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Installing : yum-plugin-downloadonly-1.1.30-14.el6.noarch 1/1

Verifying : yum-plugin-downloadonly-1.1.30-14.el6.noarch 1/1

Installed:

yum-plugin-downloadonly.noarch 0:1.1.30-14.el6

Complete!

- On the IBM Platform HPC management node, install the NVIDIA CUDA repository RPM. This configures the CUDA yum repository.

Note: The CUDA RPM can be downloaded here.

# rpm -ivh ./cuda-repo-rhel6-5.5-0.x86_64.rpm

Preparing... ########################################### [100%]

1:cuda-repo-rhel6 ########################################### [100%]

- NVIDIA CUDA requires packages that are part of Extra Packages for Enterprise Linux (EPEL), including dkms. On the IBM Platform HPC management node, install the EPEL repository RPM.

Note: The EPEL RPM for RHEL 6 family can be downloaded here.

# rpm -ivh ./epel-release-6-8.noarch.rpm

warning: ./epel-release-6-8.noarch.rpm: Header V3 RSA/SHA256 Signature, key ID 0608b895: NOKEY

Preparing... ########################################### [100%]

1:epel-release ########################################### [100%]

- Next, use the OS yum command to download the NVIDIA CUDA Toolkit RPMs, along with their dependencies, to the directory /root/CUDA5.5. These RPMs form the NVIDIA CUDA Kit, which is built in the subsequent steps.

Note: Details on using the yum --downloadonly option can be found here.

# yum install --downloadonly --downloaddir=/root/CUDA5.5/ cuda-5-5.x86_64

Loaded plugins: downloadonly, product-id, refresh-packagekit, security,

: subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

epel/metalink | 15 kB 00:00

epel | 4.2 kB 00:00

epel/primary_db | 5.7 MB 00:05

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package cuda-5-5.x86_64 0:5.5-22 will be installed

--> Processing Dependency: cuda-command-line-tools-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-headers-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-documentation-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-samples-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-visual-tools-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-core-libs-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-license-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-core-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-misc-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-extra-libs-5-5 = 5.5-22 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: xorg-x11-drv-nvidia-devel(x86-32) >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: xorg-x11-drv-nvidia-libs(x86-32) >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: xorg-x11-drv-nvidia-devel(x86-64) >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: nvidia-xconfig >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: cuda-driver >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: nvidia-settings >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Processing Dependency: nvidia-modprobe >= 319.00 for package: cuda-5-5-5.5-22.x86_64

--> Running transaction check

---> Package cuda-command-line-tools-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-core-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-core-libs-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-documentation-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-extra-libs-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-headers-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-license-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-misc-5-5.x86_64 0:5.5-22 will be installed

---> Package cuda-samples-5-5.x86_64 0:5.5-22 will be installed

--> Processing Dependency: mesa-libGLU-devel for package: cuda-samples-5-5-5.5-22.x86_64

--> Processing Dependency: freeglut-devel for package: cuda-samples-5-5-5.5-22.x86_64

--> Processing Dependency: libXi-devel for package: cuda-samples-5-5-5.5-22.x86_64

---> Package cuda-visual-tools-5-5.x86_64 0:5.5-22 will be installed

---> Package nvidia-modprobe.x86_64 0:319.37-1.el6 will be installed

---> Package nvidia-settings.x86_64 0:319.37-30.el6 will be installed

---> Package nvidia-xconfig.x86_64 0:319.37-27.el6 will be installed

---> Package xorg-x11-drv-nvidia.x86_64 1:319.37-2.el6 will be installed

--> Processing Dependency: xorg-x11-drv-nvidia-libs(x86-64) = 1:319.37-2.el6 for package: 1:xorg-x11-drv-nvidia-319.37-2.el6.x86_64

--> Processing Dependency: nvidia-kmod >= 319.37 for package: 1:xorg-x11-drv-nvidia-319.37-2.el6.x86_64

---> Package xorg-x11-drv-nvidia-devel.i686 1:319.37-2.el6 will be installed

---> Package xorg-x11-drv-nvidia-devel.x86_64 1:319.37-2.el6 will be installed

---> Package xorg-x11-drv-nvidia-libs.i686 1:319.37-2.el6 will be installed

--> Processing Dependency: libX11.so.6 for package: 1:xorg-x11-drv-nvidia-libs-319.37-2.el6.i686

--> Processing Dependency: libz.so.1 for package: 1:xorg-x11-drv-nvidia-libs-319.37-2.el6.i686

--> Processing Dependency: libXext.so.6 for package: 1:xorg-x11-drv-nvidia-libs-319.37-2.el6.i686

--> Running transaction check

---> Package freeglut-devel.x86_64 0:2.6.0-1.el6 will be installed

--> Processing Dependency: freeglut = 2.6.0-1.el6 for package: freeglut-devel-2.6.0-1.el6.x86_64

--> Processing Dependency: libGL-devel for package: freeglut-devel-2.6.0-1.el6.x86_64

--> Processing Dependency: libglut.so.3()(64bit) for package: freeglut-devel-2.6.0-1.el6.x86_64

---> Package libX11.i686 0:1.5.0-4.el6 will be installed

--> Processing Dependency: libxcb.so.1 for package: libX11-1.5.0-4.el6.i686

---> Package libXext.i686 0:1.3.1-2.el6 will be installed

---> Package libXi-devel.x86_64 0:1.6.1-3.el6 will be installed

---> Package mesa-libGLU-devel.x86_64 0:9.0-0.7.el6 will be installed

---> Package nvidia-kmod.x86_64 1:319.37-1.el6 will be installed

--> Processing Dependency: kernel-devel for package: 1:nvidia-kmod-319.37-1.el6.x86_64

--> Processing Dependency: dkms for package: 1:nvidia-kmod-319.37-1.el6.x86_64

---> Package xorg-x11-drv-nvidia-libs.x86_64 1:319.37-2.el6 will be installed

---> Package zlib.i686 0:1.2.3-29.el6 will be installed

--> Running transaction check

---> Package dkms.noarch 0:2.2.0.3-17.el6 will be installed

---> Package freeglut.x86_64 0:2.6.0-1.el6 will be installed

---> Package kernel-devel.x86_64 0:2.6.32-358.el6 will be installed

---> Package libxcb.i686 0:1.8.1-1.el6 will be installed

--> Processing Dependency: libXau.so.6 for package: libxcb-1.8.1-1.el6.i686

---> Package mesa-libGL-devel.x86_64 0:9.0-0.7.el6 will be installed

--> Processing Dependency: pkgconfig(libdrm) >= 2.4.24 for package: mesa-libGL-devel-9.0-0.7.el6.x86_64

--> Processing Dependency: pkgconfig(xxf86vm) for package: mesa-libGL-devel-9.0-0.7.el6.x86_64

--> Processing Dependency: pkgconfig(xfixes) for package: mesa-libGL-devel-9.0-0.7.el6.x86_64

--> Processing Dependency: pkgconfig(xdamage) for package: mesa-libGL-devel-9.0-0.7.el6.x86_64

--> Running transaction check

---> Package libXau.i686 0:1.0.6-4.el6 will be installed

---> Package libXdamage-devel.x86_64 0:1.1.3-4.el6 will be installed

---> Package libXfixes-devel.x86_64 0:5.0-3.el6 will be installed

---> Package libXxf86vm-devel.x86_64 0:1.1.2-2.el6 will be installed

---> Package libdrm-devel.x86_64 0:2.4.39-1.el6 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

cuda-5-5 x86_64 5.5-22 cuda 3.3 k

Installing for dependencies:

cuda-command-line-tools-5-5 x86_64 5.5-22 cuda 6.4 M

cuda-core-5-5 x86_64 5.5-22 cuda 29 M

cuda-core-libs-5-5 x86_64 5.5-22 cuda 230 k

cuda-documentation-5-5 x86_64 5.5-22 cuda 79 M

cuda-extra-libs-5-5 x86_64 5.5-22 cuda 120 M

cuda-headers-5-5 x86_64 5.5-22 cuda 1.1 M

cuda-license-5-5 x86_64 5.5-22 cuda 25 k

cuda-misc-5-5 x86_64 5.5-22 cuda 1.7 M

cuda-samples-5-5 x86_64 5.5-22 cuda 150 M

cuda-visual-tools-5-5 x86_64 5.5-22 cuda 268 M

dkms noarch 2.2.0.3-17.el6 epel 74 k

freeglut x86_64 2.6.0-1.el6 xCAT-rhels6.4-path0 172 k

freeglut-devel x86_64 2.6.0-1.el6 xCAT-rhels6.4-path0 112 k

kernel-devel x86_64 2.6.32-358.el6 xCAT-rhels6.4-path0 8.1 M

libX11 i686 1.5.0-4.el6 xCAT-rhels6.4-path0 590 k

libXau i686 1.0.6-4.el6 xCAT-rhels6.4-path0 24 k

libXdamage-devel x86_64 1.1.3-4.el6 xCAT-rhels6.4-path0 9.3 k

libXext i686 1.3.1-2.el6 xCAT-rhels6.4-path0 34 k

libXfixes-devel x86_64 5.0-3.el6 xCAT-rhels6.4-path0 12 k

libXi-devel x86_64 1.6.1-3.el6 xCAT-rhels6.4-path0 102 k

libXxf86vm-devel x86_64 1.1.2-2.el6 xCAT-rhels6.4-path0 17 k

libdrm-devel x86_64 2.4.39-1.el6 xCAT-rhels6.4-path0 77 k

libxcb i686 1.8.1-1.el6 xCAT-rhels6.4-path0 114 k

mesa-libGL-devel x86_64 9.0-0.7.el6 xCAT-rhels6.4-path0 507 k

mesa-libGLU-devel x86_64 9.0-0.7.el6 xCAT-rhels6.4-path0 111 k

nvidia-kmod x86_64 1:319.37-1.el6 cuda 4.0 M

nvidia-modprobe x86_64 319.37-1.el6 cuda 14 k

nvidia-settings x86_64 319.37-30.el6 cuda 847 k

nvidia-xconfig x86_64 319.37-27.el6 cuda 89 k

xorg-x11-drv-nvidia x86_64 1:319.37-2.el6 cuda 5.1 M

xorg-x11-drv-nvidia-devel i686 1:319.37-2.el6 cuda 116 k

xorg-x11-drv-nvidia-devel x86_64 1:319.37-2.el6 cuda 116 k

xorg-x11-drv-nvidia-libs i686 1:319.37-2.el6 cuda 28 M

xorg-x11-drv-nvidia-libs x86_64 1:319.37-2.el6 cuda 28 M

zlib i686 1.2.3-29.el6 xCAT-rhels6.4-path0 73 k

Transaction Summary

================================================================================

Install 36 Package(s)

Total download size: 731 M

Installed size: 1.6 G

Is this ok [y/N]: y

Downloading Packages:

(1/36): cuda-5-5-5.5-22.x86_64.rpm | 3.3 kB 00:00

(2/36): cuda-command-line-tools-5-5-5.5-22.x86_64.rpm | 6.4 MB 00:06

(3/36): cuda-core-5-5-5.5-22.x86_64.rpm | 29 MB 00:31

(4/36): cuda-core-libs-5-5-5.5-22.x86_64.rpm | 230 kB 00:00

(5/36): cuda-documentation-5-5-5.5-22.x86_64.rpm | 79 MB 01:28

(6/36): cuda-extra-libs-5-5-5.5-22.x86_64.rpm | 120 MB 02:17

(7/36): cuda-headers-5-5-5.5-22.x86_64.rpm | 1.1 MB 00:01

(8/36): cuda-license-5-5-5.5-22.x86_64.rpm | 25 kB 00:00

(9/36): cuda-misc-5-5-5.5-22.x86_64.rpm | 1.7 MB 00:01

(10/36): cuda-samples-5-5-5.5-22.x86_64.rpm | 150 MB 02:51

(11/36): cuda-visual-tools-5-5-5.5-22.x86_64.rpm | 268 MB 05:06

(12/36): dkms-2.2.0.3-17.el6.noarch.rpm | 74 kB 00:00

(13/36): freeglut-2.6.0-1.el6.x86_64.rpm | 172 kB 00:00

(14/36): freeglut-devel-2.6.0-1.el6.x86_64.rpm | 112 kB 00:00

(15/36): kernel-devel-2.6.32-358.el6.x86_64.rpm | 8.1 MB 00:00

(16/36): libX11-1.5.0-4.el6.i686.rpm | 590 kB 00:00

(17/36): libXau-1.0.6-4.el6.i686.rpm | 24 kB 00:00

(18/36): libXdamage-devel-1.1.3-4.el6.x86_64.rpm | 9.3 kB 00:00

(19/36): libXext-1.3.1-2.el6.i686.rpm | 34 kB 00:00

(20/36): libXfixes-devel-5.0-3.el6.x86_64.rpm | 12 kB 00:00

(21/36): libXi-devel-1.6.1-3.el6.x86_64.rpm | 102 kB 00:00

(22/36): libXxf86vm-devel-1.1.2-2.el6.x86_64.rpm | 17 kB 00:00

(23/36): libdrm-devel-2.4.39-1.el6.x86_64.rpm | 77 kB 00:00

(24/36): libxcb-1.8.1-1.el6.i686.rpm | 114 kB 00:00

(25/36): mesa-libGL-devel-9.0-0.7.el6.x86_64.rpm | 507 kB 00:00

(26/36): mesa-libGLU-devel-9.0-0.7.el6.x86_64.rpm | 111 kB 00:00

(27/36): nvidia-kmod-319.37-1.el6.x86_64.rpm | 4.0 MB 00:13

(28/36): nvidia-modprobe-319.37-1.el6.x86_64.rpm | 14 kB 00:00

(29/36): nvidia-settings-319.37-30.el6.x86_64.rpm | 847 kB 00:01

(30/36): nvidia-xconfig-319.37-27.el6.x86_64.rpm | 89 kB 00:00

(31/36): xorg-x11-drv-nvidia-319.37-2.el6.x86_64.rpm | 5.1 MB 00:17

(32/36): xorg-x11-drv-nvidia-devel-319.37-2.el6.i686.rpm | 116 kB 00:00

(33/36): xorg-x11-drv-nvidia-devel-319.37-2.el6.x86_64.r | 116 kB 00:00

(34/36): xorg-x11-drv-nvidia-libs-319.37-2.el6.i686.rpm | 28 MB 00:31

(35/36): xorg-x11-drv-nvidia-libs-319.37-2.el6.x86_64.rp | 28 MB 02:15

(36/36): zlib-1.2.3-29.el6.i686.rpm | 73 kB 00:00

--------------------------------------------------------------------------------

Total 786 kB/s | 731 MB 15:53

exiting because --downloadonly specified

- Add dkms as a custom package to the default image profile. All other dependencies of NVIDIA CUDA that are not packaged in the kit are part of the OS distribution (RHEL 6.4).

Note that the plcclient.sh CLI is used here to refresh the image profile in the IBM Platform HPC Web console.

# cp /root/CUDA5.5/dkms-2.2.0.3-17.el6.noarch.rpm /install/contrib/rhels6.4/x86_64/

# plcclient.sh -d "pcmimageprofileloader"

Loaders startup successfully.

- Now we are ready to create the NVIDIA CUDA 5.5 Kit for IBM Platform HPC.

The Kit will contain 3 components:

- NVIDIA Display Driver

- NVIDIA CUDA 5.5 Toolkit

- NVIDIA CUDA 5.5 Samples and Documentation

The buildkit CLI is used to create a new kit template. The template will be used as the basis for the NVIDIA CUDA 5.5 Kit. The buildkit CLI is executed within the directory /root.

# buildkit create kit-CUDA

Kit template for kit-CUDA created in /root/kit-CUDA directory

- Copy the buildkit.conf file provided in Appendix A to /root/kit-CUDA.

Note that the original buildkit.conf is backed up first.

# mv /root/kit-CUDA/buildkit.conf /root/kit-CUDA/buildkit.conf.bak

# cp buildkit.conf /root/kit-CUDA

- Copy the NVIDIA RPMs to the kit source_packages directory. Here we create a subdirectory for each kit component, then copy the matching RPMs into it.

# mkdir /root/kit-CUDA/source_packages/cuda-samples

# mkdir /root/kit-CUDA/source_packages/cuda-toolkit

# mkdir /root/kit-CUDA/source_packages/nvidia-driver

# cp /root/CUDA5.5/nvidia-kmod* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/nvidia-modprobe* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/nvidia-settings* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/nvidia-xconfig* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/xorg-x11-drv-nvidia-319.37-2.el6.x86_64* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/xorg-x11-drv-nvidia-devel-319.37-2.el6.x86_64* /root/kit-CUDA/source_packages/nvidia-driver/

# cp /root/CUDA5.5/cuda-command-line-tools* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-core* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-extra* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-headers* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-license* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-misc* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-visual-tools* /root/kit-CUDA/source_packages/cuda-toolkit/

# cp /root/CUDA5.5/cuda-documentation* /root/kit-CUDA/source_packages/cuda-samples/

# cp /root/CUDA5.5/cuda-samples* /root/kit-CUDA/source_packages/cuda-samples/

- Copy into place a script that creates the symbolic link /usr/lib64/nvidia/libnvidia-ml.so, which is required by the LSF GPU ELIM script. The example script createsymlink.sh can be found in Appendix B.

# mkdir /root/kit-CUDA/scripts/nvidia

# cp createsymlink.sh /root/kit-CUDA/scripts/nvidia/

# chmod 755 /root/kit-CUDA/scripts/nvidia/createsymlink.sh

- Build the kit repository and the final kit package.

# cd /root/kit-CUDA

# buildkit buildrepo all

Spawning worker 0 with 22 pkgs

Workers Finished

Gathering worker results

Saving Primary metadata

Saving file lists metadata

Saving other metadata

Generating sqlite DBs

Sqlite DBs complete

# buildkit buildtar

Kit tar file /root/kit-CUDA/kit-CUDA-5.5-1.tar.bz2 successfully built.

B. Deploying the NVIDIA CUDA 5.5 Kit

In the preceding section, a detailed procedure was provided for downloading NVIDIA CUDA 5.5 and packaging the NVIDIA CUDA software as a kit for deployment in a cluster managed by IBM Platform HPC.

This section provides detailed steps for installing and deploying the kit. Screenshots are included where necessary to illustrate certain operations.
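Before adding the kit to the Kit Library, it can be worth confirming that the copy commands in Section A placed RPMs in every component directory. The following is a minimal sketch, assuming the source_packages layout created in Section A (nvidia-driver, cuda-toolkit and cuda-samples under /root/kit-CUDA/source_packages); count_kit_rpms is a hypothetical helper, not part of buildkit. Assuming /root/CUDA5.5 held only the packages downloaded above, the copy commands in Section A should yield 6, 8 and 2 RPMs respectively.

```shell
# Sketch (hypothetical helper): report how many RPMs each kit component
# directory contains. Assumes the layout from Section A:
#   <kit dir>/source_packages/<component>/*.rpm
count_kit_rpms() {
    local kit_dir="${1:-/root/kit-CUDA}" comp dir n
    for comp in nvidia-driver cuda-toolkit cuda-samples; do
        dir="$kit_dir/source_packages/$comp"
        if [ ! -d "$dir" ]; then
            echo "$comp: MISSING"
            continue
        fi
        # find avoids the literal "*.rpm" that an unmatched glob would leave behind
        n=$(find "$dir" -maxdepth 1 -type f -name '*.rpm' | wc -l)
        # $((n)) strips any padding some wc implementations add
        echo "$comp: $((n))"
    done
}
```

For example, running count_kit_rpms /root/kit-CUDA prints one "component: count" line per directory, or "MISSING" if a directory was never created.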

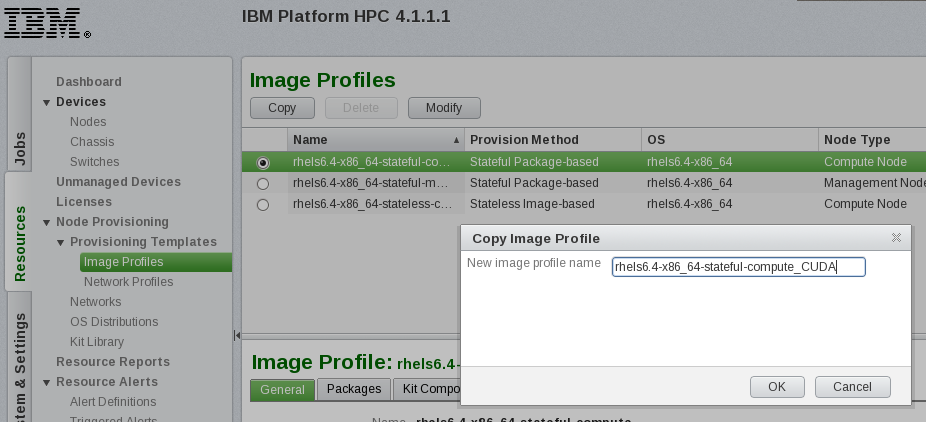

- In the IBM Platform HPC Web portal, select Resources > Node Provisioning > Provisioning Templates > Image Profiles and click Copy to create a copy of the profile rhels6.4-x86_64-stateful-compute. The new image profile, named rhels6.4-x86_64-stateful-compute_CUDA, will be used for nodes equipped with NVIDIA GPUs.

-

Next, we add the CUDA 5.5 Kit to the Kit Library. In the IBM Platform HPC Web portal, select Resources > Node Provisioning > Kit Library and click Add. Next Browse to the CUDA 5.5 Kit (kit-CUDA-5.5-1.tar.bz2) and add to the Kit Library.

-

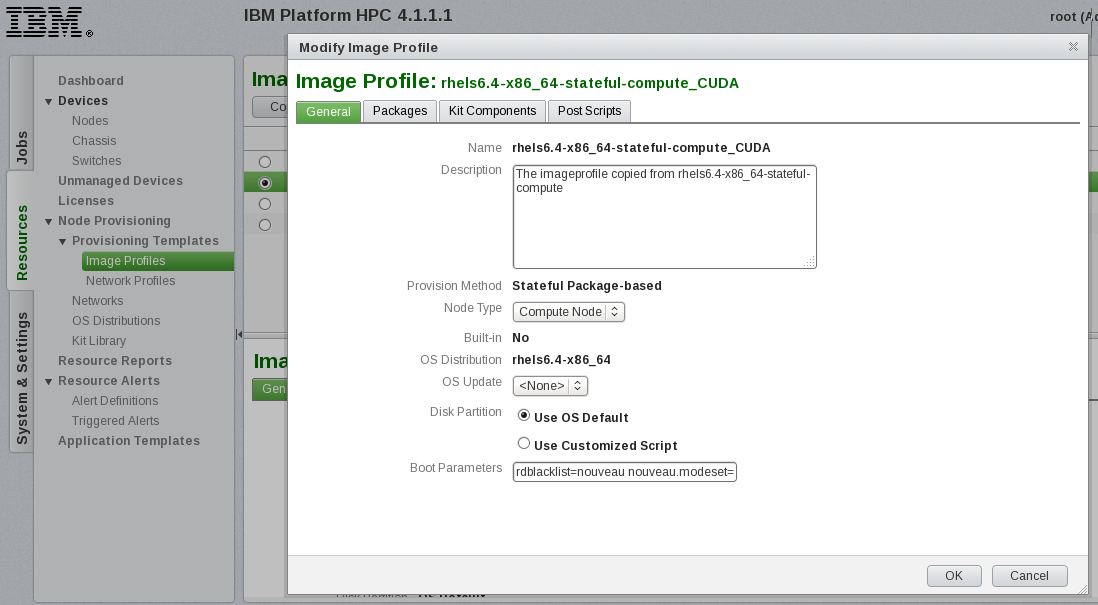

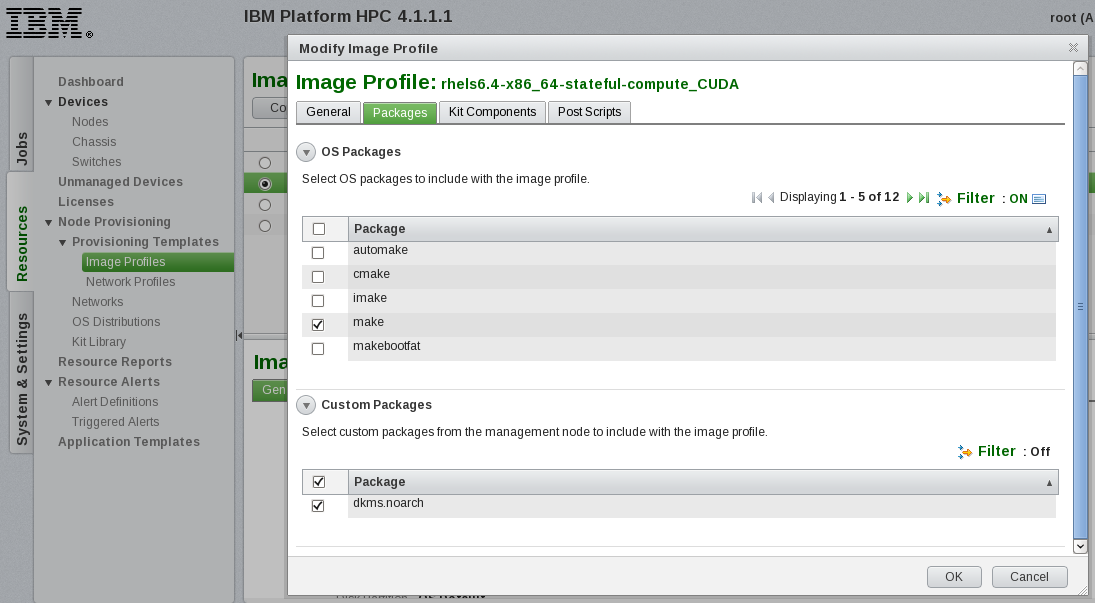

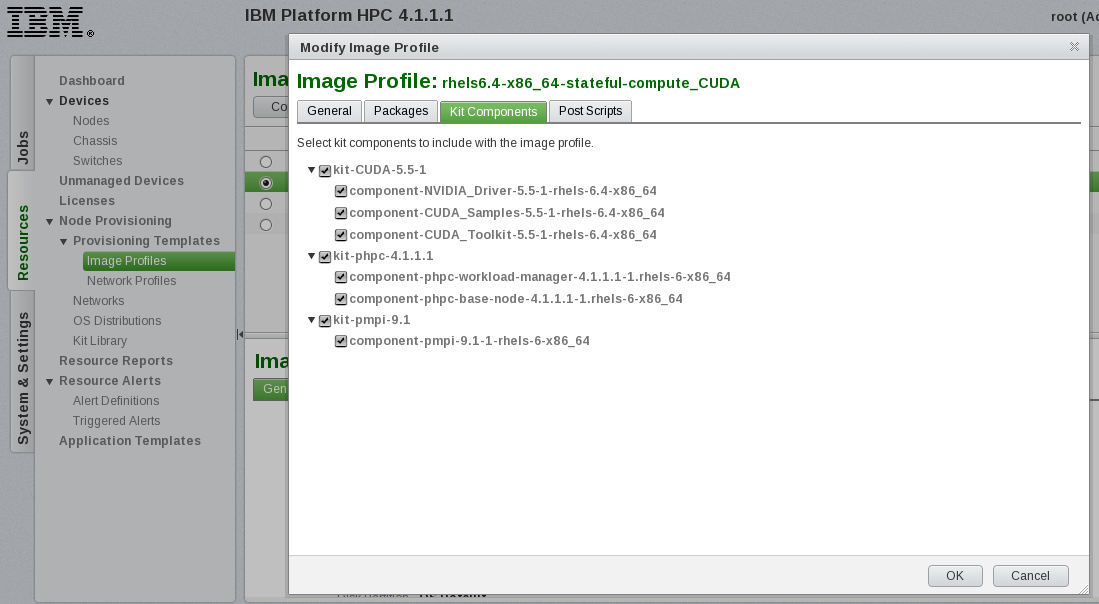

The image profile rhels6.4-x86_64-stateful-compute_CUDA is updated as follows:

- select the custom package dkms

- select the OS package make

- specify Boot Parameters: rdblacklist=nouveau nouveau.modeset=0

- enable CUDA Kit components

In the IBM Platform HPC Web portal, browse to Resources > Node Provisioning > Provisioning Templates > Image Profiles, select rhels6.4-x86_64-stateful-compute_CUDA and click Modify.

Under General, specify the Boot Parameters: rdblacklist=nouveau nouveau.modeset=0

Under Packages > Custom Packages, select dkms.noarch. Under Packages > OS Packages, select make (the Filter can be used to locate it).

Under Kit Components select:

- component-NVIDIA_Driver-5.5-1-rhels-6.4-x86_64

- component-CUDA_Samples-5.5-1-rhels-6.4-x86_64

- component-CUDA_Toolkit-5.5-1-rhels-6.4-x86_64

At a minimum, the NVIDIA_Driver and CUDA_Toolkit components should be selected.

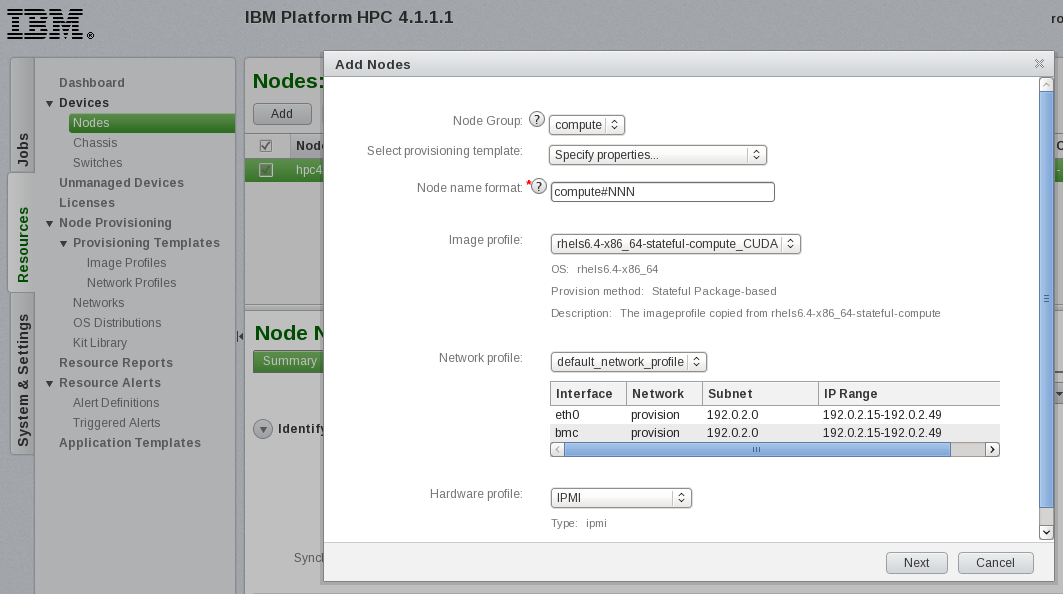

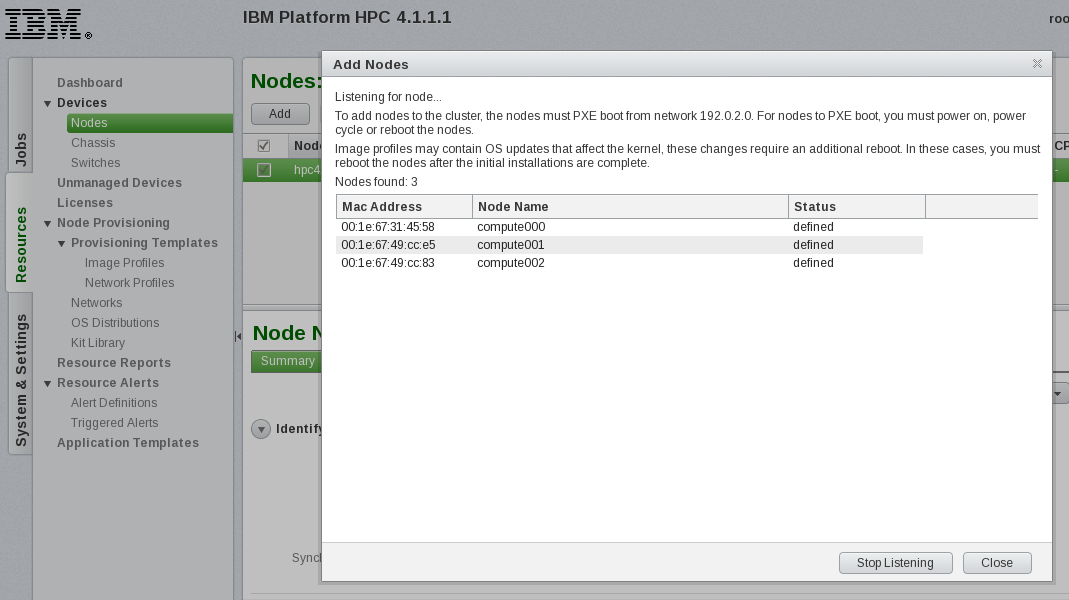

- Next, provision the nodes equipped with NVIDIA GPUs. Here, auto discovery by PXE boot is used to provision the nodes with the newly created image profile rhels6.4-x86_64-stateful-compute_CUDA.

In the IBM Platform HPC Web console, select Resources > Devices > Nodes and click Add. Specify the following provisioning template properties:

- Image profile rhels6.4-x86_64-stateful-compute_CUDA

- Network profile default_network_profile

- Hardware profile IPMI

Then select Auto discovery by PXE boot and power on the nodes. Nodes compute000-compute002 are provisioned with the following NVIDIA CUDA 5.5 Kit components:

- component-NVIDIA_Driver-5.5-1-rhels-6.4-x86_64

- component-CUDA_Samples-5.5-1-rhels-6.4-x86_64

- component-CUDA_Toolkit-5.5-1-rhels-6.4-x86_64
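Because the image profile disables the nouveau driver through boot parameters, a quick check on a provisioned node can confirm that both parameters reached the kernel command line and that nouveau is not loaded. This is a minimal sketch; check_nouveau_disabled is a hypothetical helper, and the file paths are parameters (defaulting to /proc/cmdline and /proc/modules) so that it can also be exercised against copies of those files.

```shell
# Sketch (hypothetical helper): verify the nouveau blacklist on a node.
# Prints a line for each problem found and returns non-zero if any exist.
check_nouveau_disabled() {
    local cmdline_file="${1:-/proc/cmdline}"
    local modules_file="${2:-/proc/modules}"
    local status=0 param
    # Each boot parameter set in the image profile should appear as its own token.
    for param in rdblacklist=nouveau nouveau.modeset=0; do
        if ! tr ' ' '\n' < "$cmdline_file" | grep -qxF "$param"; then
            echo "missing boot parameter: $param"
            status=1
        fi
    done
    # /proc/modules lists one loaded module per line, with the module name first.
    if awk '{ print $1 }' "$modules_file" | grep -qxF nouveau; then
        echo "nouveau module is loaded"
        status=1
    fi
    return "$status"
}
```

On a node, check_nouveau_disabled && echo "nouveau disabled" reports success; to run it across the cluster with xdsh, the function would first need to be saved as a script on the nodes.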

- After provisioning, check the installation of the NVIDIA Driver and CUDA stack. xdsh is used here to concurrently execute the NVIDIA CLI nvidia-smi across the compute nodes.

# xdsh compute00[0-2] nvidia-smi -L

compute000: GPU 0: Tesla K20c (UUID: GPU-46d00ece-a26d-5f8c-c695-23e525a88075)

compute002: GPU 0: Tesla K40c (UUID: GPU-e3ac8955-6a76-12e1-0786-ec336b0b3824)

compute001: GPU 0: Tesla K40c (UUID: GPU-ba2733d8-4473-0a69-af14-e80008568a42)

- Next, compile and run the NVIDIA CUDA deviceQuery sample on each node. Be sure to check the output for any errors.

# xdsh compute00[0-2] -f 1 "cd /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery;make"

compute000: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -I../../common/inc -m64 -gencode arch=compute_10,code=sm_10 -gencode arch=compute_20,code=sm_20 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=\"sm_35,compute_35\" -o deviceQuery.o -c deviceQuery.cpp

compute000: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -m64 -o deviceQuery deviceQuery.o

compute000: mkdir -p ../../bin/x86_64/linux/release

compute000: cp deviceQuery ../../bin/x86_64/linux/release

compute001: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -I../../common/inc -m64 -gencode arch=compute_10,code=sm_10 -gencode arch=compute_20,code=sm_20 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=\"sm_35,compute_35\" -o deviceQuery.o -c deviceQuery.cpp

compute001: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -m64 -o deviceQuery deviceQuery.o

compute001: mkdir -p ../../bin/x86_64/linux/release

compute001: cp deviceQuery ../../bin/x86_64/linux/release

compute002: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -I../../common/inc -m64 -gencode arch=compute_10,code=sm_10 -gencode arch=compute_20,code=sm_20 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=\"sm_35,compute_35\" -o deviceQuery.o -c deviceQuery.cpp

compute002: /usr/local/cuda-5.5/bin/nvcc -ccbin g++ -m64 -o deviceQuery deviceQuery.o

compute002: mkdir -p ../../bin/x86_64/linux/release

compute002: cp deviceQuery ../../bin/x86_64/linux/release

# xdsh compute00[0-2] -f 1 /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery

compute000: /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery Starting...

compute000:

compute000: CUDA Device Query (Runtime API) version (CUDART static linking)

compute000:

compute000: Detected 1 CUDA Capable device(s)

compute000:

compute000: Device 0: "Tesla K20c"

compute000: CUDA Driver Version / Runtime Version 5.5 / 5.5

compute000: CUDA Capability Major/Minor version number: 3.5

compute000: Total amount of global memory: 4800 MBytes (5032706048 bytes)

compute000: (13) Multiprocessors, (192) CUDA Cores/MP: 2496 CUDA Cores

compute000: GPU Clock rate: 706 MHz (0.71 GHz)

compute000: Memory Clock rate: 2600 Mhz

compute000: Memory Bus Width: 320-bit

compute000: L2 Cache Size: 1310720 bytes

compute000: Maximum Texture Dimension Size (x,y,z) 1D=(65536), 2D=(65536, 65536), 3D=(4096, 4096, 4096)

compute000: Maximum Layered 1D Texture Size, (num) layers 1D=(16384), 2048 layers

compute000: Maximum Layered 2D Texture Size, (num) layers 2D=(16384, 16384), 2048 layers

compute000: Total amount of constant memory: 65536 bytes

compute000: Total amount of shared memory per block: 49152 bytes

compute000: Total number of registers available per block: 65536

compute000: Warp size: 32

compute000: Maximum number of threads per multiprocessor: 2048

compute000: Maximum number of threads per block: 1024

compute000: Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

compute000: Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

compute000: Maximum memory pitch: 2147483647 bytes

compute000: Texture alignment: 512 bytes

compute000: Concurrent copy and kernel execution: Yes with 2 copy engine(s)

compute000: Run time limit on kernels: No

compute000: Integrated GPU sharing Host Memory: No

compute000: Support host page-locked memory mapping: Yes

compute000: Alignment requirement for Surfaces: Yes

compute000: Device has ECC support: Enabled

compute000: Device supports Unified Addressing (UVA): Yes

compute000: Device PCI Bus ID / PCI location ID: 10 / 0

compute000: Compute Mode:

compute000: < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

compute000:

compute000: deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 5.5, CUDA Runtime Version = 5.5, NumDevs = 1, Device0 = Tesla K20c

compute000: Result = PASS

compute001: /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery Starting...

....

....

compute002: /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery Starting...

....

....

compute002: deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 5.5, CUDA Runtime Version = 5.5, NumDevs = 1, Device0 = Tesla K40c

compute002: Result = PASS

At this point, we have produced a CUDA 5.5 Kit and deployed nodes with CUDA 5.5. We have also verified the installation of the NVIDIA driver and confirmed that the CUDA deviceQuery sample runs correctly.
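On larger clusters, scanning the xdsh deviceQuery output by eye becomes tedious. The sketch below condenses it to one line per node (node name, result, name of device 0) and returns a non-zero status if any node reports something other than PASS. It assumes the "node: text" prefix that xdsh adds, as in the output above; summarize_devicequery is a hypothetical helper, only device 0 is captured, and a node that prints no Result line is simply not listed.

```shell
# Sketch (hypothetical helper): summarize xdsh deviceQuery output from stdin.
summarize_devicequery() {
    awk '
        # Record the name of device 0 per node, e.g.  compute000: Device 0: "Tesla K20c"
        /: Device 0: "/ {
            node = $1
            sub(/:$/, "", node)
            split($0, q, "\"")
            model[node] = q[2]
        }
        # Record the final verdict per node, e.g.  compute000: Result = PASS
        / Result = / {
            node = $1
            sub(/:$/, "", node)
            if (!(node in result)) order[++cnt] = node
            result[node] = $NF
            if ($NF != "PASS") bad = 1
        }
        # Print nodes in the order their results appeared; fail if any did not pass.
        END {
            for (i = 1; i <= cnt; i++)
                print order[i], result[order[i]], model[order[i]]
            exit (bad ? 1 : 0)
        }'
}
```

For example: xdsh compute00[0-2] -f 1 /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery | summarize_devicequery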

C. Enable Management of NVIDIA GPU devices

The IBM Platform HPC Administration Guide contains detailed steps on enabling NVIDIA GPU monitoring. This can be found in Chapter 10 in the section titled Enabling the GPU.

Below, as the user root, we make the necessary updates to the IBM Platform HPC (and workload manager) configuration files to enable LSF GPU support.
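The per-GPU resource names defined in the steps that follow use a fixed pattern (gpumodeN, gputempN, gpueccN and gpumodelN for GPU N). The sketch below, using hypothetical helper names, prints those lines for lsf.shared and for the ResourceMap in lsf.cluster.cluster-name, which can help avoid typos when editing by hand. The fixed entries (ngpus, gpushared, gpuexcl_thrd, gpuprohibited, gpuexcl_proc and gpudriver) are not generated. The example below defines four GPUs; this document does not state whether the GPU ELIM reports indices beyond GPU 3, so verify that before generating more.

```shell
# Sketch (hypothetical helpers): generate the per-GPU resource lines.

# Ordinal used in the descriptions ("1st GPU", "2nd GPU", ...); correct up to 20.
gpu_ordinal() {
    case "$1" in
        1) echo 1st ;;
        2) echo 2nd ;;
        3) echo 3rd ;;
        *) echo "${1}th" ;;
    esac
}

# lsf.shared Resource lines for GPUs 0..N-1 (N defaults to 4, as in the example).
gpu_resource_lines() {
    local ngpu="${1:-4}" i=0 ord
    while [ "$i" -lt "$ngpu" ]; do
        ord=$(gpu_ordinal $((i + 1)))
        printf 'gpumode%d Numeric 60 N (Mode of %s GPU)\n' "$i" "$ord"
        printf 'gputemp%d Numeric 60 Y (Temperature of %s GPU)\n' "$i" "$ord"
        printf 'gpuecc%d Numeric 60 N (ECC errors on %s GPU)\n' "$i" "$ord"
        i=$((i + 1))
    done
    i=0
    while [ "$i" -lt "$ngpu" ]; do
        printf 'gpumodel%d String 60 () (Model name of %s GPU)\n' "$i" "$(gpu_ordinal $((i + 1)))"
        i=$((i + 1))
    done
}

# Matching ResourceMap lines, all mapped to [default].
gpu_resourcemap_lines() {
    local ngpu="${1:-4}" i=0
    while [ "$i" -lt "$ngpu" ]; do
        printf 'gpumode%d [default]\ngputemp%d [default]\ngpuecc%d [default]\n' "$i" "$i" "$i"
        i=$((i + 1))
    done
    i=0
    while [ "$i" -lt "$ngpu" ]; do
        printf 'gpumodel%d [default]\n' "$i"
        i=$((i + 1))
    done
}
```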

- Define the GPU processing resources reported by ELIM in $LSF_ENVDIR/lsf.shared. You can define a new Resource section that includes the following resource definitions:

Begin Resource

RESOURCENAME TYPE INTERVAL INCREASING CONSUMABLE DESCRIPTION # Keywords

ngpus Numeric 60 N (Number of GPUs)

gpushared Numeric 60 N (Number of GPUs in Shared Mode)

gpuexcl_thrd Numeric 60 N (Number of GPUs in Exclusive thread Mode)

gpuprohibited Numeric 60 N (Number of GPUs in Prohibited Mode)

gpuexcl_proc Numeric 60 N (Number of GPUs in Exclusive process Mode)

gpumode0 Numeric 60 N (Mode of 1st GPU)

gputemp0 Numeric 60 Y (Temperature of 1st GPU)

gpuecc0 Numeric 60 N (ECC errors on 1st GPU)

gpumode1 Numeric 60 N (Mode of 2nd GPU)

gputemp1 Numeric 60 Y (Temperature of 2nd GPU)

gpuecc1 Numeric 60 N (ECC errors on 2nd GPU)

gpumode2 Numeric 60 N (Mode of 3rd GPU)

gputemp2 Numeric 60 Y (Temperature of 3rd GPU)

gpuecc2 Numeric 60 N (ECC errors on 3rd GPU)

gpumode3 Numeric 60 N (Mode of 4th GPU)

gputemp3 Numeric 60 Y (Temperature of 4th GPU)

gpuecc3 Numeric 60 N (ECC errors on 4th GPU)

gpudriver String 60 () (GPU driver version)

gpumodel0 String 60 () (Model name of 1st GPU)

gpumodel1 String 60 () (Model name of 2nd GPU)

gpumodel2 String 60 () (Model name of 3rd GPU)

gpumodel3 String 60 () (Model name of 4th GPU)

End Resource

- Define the following resource map to support GPU processing in $LSF_ENVDIR/lsf.cluster.cluster-name, where cluster-name is the name of your cluster.

Begin ResourceMap

RESOURCENAME LOCATION

ngpus [default]

gpushared [default]

gpuexcl_thrd [default]

gpuprohibited [default]

gpuexcl_proc [default]

gpumode0 [default]

gputemp0 [default]

gpuecc0 [default]

gpumode1 [default]

gputemp1 [default]

gpuecc1 [default]

gpumode2 [default]

gputemp2 [default]

gpuecc2 [default]

gpumode3 [default]

gputemp3 [default]

gpuecc3 [default]

gpumodel0 [default]

gpumodel1 [default]

gpumodel2 [default]

gpumodel3 [default]

gpudriver [default]

End ResourceMap

- To apply the newly defined resources, run the following command on the IBM Platform HPC management node. The LIMs must be restarted on all hosts.

# lsadmin reconfig

Checking configuration files ...

No errors found.

Restart only the master candidate hosts? [y/n] n

Do you really want to restart LIMs on all hosts? [y/n] y

Restart LIM on <hpc4111tete> ...... done

Restart LIM on <compute000> ...... done

Restart LIM on <compute001> ...... done

Restart LIM on <compute002> ...... done

- Define how NVIDIA CUDA jobs are submitted to LSF in $LSF_ENVDIR/lsbatch/cluster-name/configdir/lsb.applications, where cluster-name is the name of your cluster.

Begin Application

NAME = nvjobsh

DESCRIPTION = NVIDIA Shared GPU Jobs

JOB_STARTER = nvjob "%USRCMD"

RES_REQ = select[gpushared>0]

End Application

Begin Application

NAME = nvjobex_t

DESCRIPTION = NVIDIA Exclusive GPU Jobs

JOB_STARTER = nvjob "%USRCMD"

RES_REQ = rusage[gpuexcl_thrd=1]

End Application

Begin Application

NAME = nvjobex2_t

DESCRIPTION = NVIDIA Exclusive GPU Jobs

JOB_STARTER = nvjob "%USRCMD"

RES_REQ = rusage[gpuexcl_thrd=2]

End Application

Begin Application

NAME = nvjobex_p

DESCRIPTION = NVIDIA Exclusive-process GPU Jobs

JOB_STARTER = nvjob "%USRCMD"

RES_REQ = rusage[gpuexcl_proc=1]

End Application

Begin Application

NAME = nvjobex2_p

DESCRIPTION = NVIDIA Exclusive-process GPU Jobs

JOB_STARTER = nvjob "%USRCMD"

RES_REQ = rusage[gpuexcl_proc=2]

End Application

- To add the GPU-related application profiles, run the following command on the IBM Platform HPC management node.

# badmin reconfig

Checking configuration files ...

No errors found.

Reconfiguration initiated

- To enable monitoring of GPU-related metrics in the IBM Platform HPC Web portal, set the variable ENABLE_GPU_MONITORING to Y in the $PMC_TOP/gui/conf/pmc.conf configuration file. For the change to take effect, restart the IBM Platform HPC Web portal server.

# service pmc stop

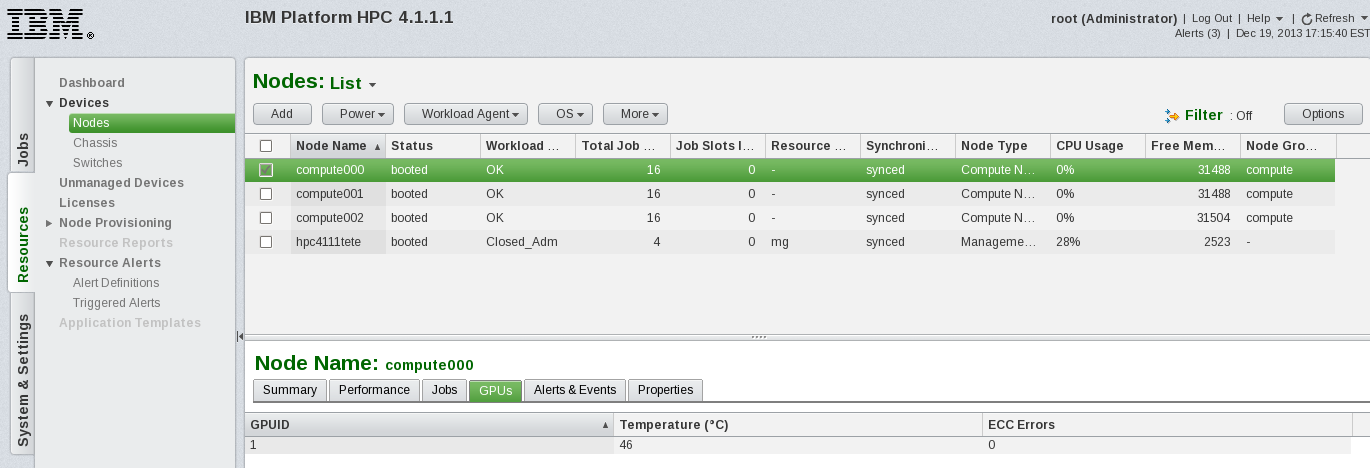

# service pmc start- Within the IBM Platform HPC Web portal, the GPU tab is now present for nodes equipped with NVIDIA GPUs. Browse to Resources > Devices > Nodes and select the GPU tab.
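The pmc.conf edit described above can also be scripted. A minimal sketch follows; it assumes pmc.conf uses plain KEY=VALUE lines (this document does not show the file's format, so check it first), and enable_gpu_monitoring is a hypothetical helper. It keeps a .bak copy, in the same spirit as the buildkit.conf backup in Section A, then either rewrites an existing ENABLE_GPU_MONITORING assignment or appends one.

```shell
# Sketch (hypothetical helper): set ENABLE_GPU_MONITORING=Y in pmc.conf.
# Assumes KEY=VALUE lines; commented-out assignments are left untouched.
enable_gpu_monitoring() {
    local conf="${1:-$PMC_TOP/gui/conf/pmc.conf}"
    [ -f "$conf" ] || { echo "not found: $conf" >&2; return 1; }
    # Keep a backup copy before editing.
    cp -p "$conf" "$conf.bak" || return 1
    if grep -q '^[[:space:]]*ENABLE_GPU_MONITORING[[:space:]]*=' "$conf"; then
        # Rewrite the existing assignment in place (GNU sed -i, as on RHEL).
        sed -i 's/^[[:space:]]*ENABLE_GPU_MONITORING[[:space:]]*=.*/ENABLE_GPU_MONITORING=Y/' "$conf"
    else
        # Append the setting if the variable is not present yet.
        echo 'ENABLE_GPU_MONITORING=Y' >> "$conf"
    fi
}
```

For example: enable_gpu_monitoring && service pmc stop && service pmc start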

D. Job Submission - specifying GPU resources

The IBM Platform HPC Administration Guide contains detailed steps for submitting jobs to GPU resources. Please refer to Chapter 10 of the guide, in the section titled Submitting jobs to a cluster by specifying GPU resources, for detailed information.

Here we look at a simple example. In Section C, we configured a number of application profiles specific to GPU jobs.
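When wrapping submissions in scripts, it can help to choose among these profiles programmatically. The sketch below maps a GPU mode and GPU count to the profile names defined in lsb.applications in Section C; the function name and the mode labels (shared, exclusive_thread, exclusive_process) are hypothetical. The shared profile only selects hosts with gpushared>0 and reserves no GPU count, so the count is ignored for it.

```shell
# Sketch (hypothetical helper): map a GPU mode and count to a Section C profile.
gpu_app_profile() {
    local mode="$1" count="${2:-1}"
    case "$mode:$count" in
        shared:*)            echo nvjobsh ;;     # select[gpushared>0]
        exclusive_thread:1)  echo nvjobex_t ;;   # rusage[gpuexcl_thrd=1]
        exclusive_thread:2)  echo nvjobex2_t ;;  # rusage[gpuexcl_thrd=2]
        exclusive_process:1) echo nvjobex_p ;;   # rusage[gpuexcl_proc=1]
        exclusive_process:2) echo nvjobex2_p ;;  # rusage[gpuexcl_proc=2]
        *) echo "no profile for mode=$mode count=$count" >&2; return 1 ;;
    esac
}
```

The result could be passed to LSF's bsub -app option, which takes an application profile name, for example bsub -app "$(gpu_app_profile exclusive_thread 1)" ./my_cuda_app (my_cuda_app being a placeholder).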

- The list of application profiles can be displayed using the bapp CLI:

# bapp

APP_NAME      NJOBS   PEND    RUN   SUSP
nvjobex2_p        0      0      0      0
nvjobex2_t        0      0      0      0
nvjobex_p         0      0      0      0
nvjobex_t         0      0      0      0
nvjobsh           0      0      0      0

Furthermore, details regarding a specific profile can be obtained as follows:

# bapp -l nvjobsh

APPLICATION NAME: nvjobsh

-- NVIDIA Shared GPU Jobs

STATISTICS:

NJOBS   PEND    RUN  SSUSP  USUSP    RSV
    0      0      0      0      0      0

PARAMETERS:

JOB_STARTER: nvjob "%USRCMD"

RES_REQ: select[gpushared>0]

- To submit a job for execution on a GPU resource, use the following syntax. Here, the previously compiled deviceQuery application is submitted for execution. The following command is run as user phpcadmin.

The bsub -a option is used to specify the application profile at the time of job submission.

$ bsub -I -a gpushared /usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery

Job <208> is submitted to default queue <medium_priority>.

<<Waiting for dispatch ...>>

<<Starting on compute000>>

/usr/local/cuda-5.5/samples/1_Utilities/deviceQuery/deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "Tesla K20c"

CUDA Driver Version / Runtime Version 5.5 / 5.5

CUDA Capability Major/Minor version number: 3.5

Total amount of global memory: 4800 MBytes (5032706048 bytes)

(13) Multiprocessors, (192) CUDA Cores/MP: 2496 CUDA Cores

GPU Clock rate: 706 MHz (0.71 GHz)

Memory Clock rate: 2600 Mhz

Memory Bus Width: 320-bit

L2 Cache Size: 1310720 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(65536), 2D=(65536, 65536), 3D=(4096, 4096, 4096)

Maximum Layered 1D Texture Size, (num) layers 1D=(16384), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(16384, 16384), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

....

....

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 5.5, CUDA Runtime Version = 5.5, NumDevs = 1, Device0 = Tesla K20c

Result = PASS

Appendix A: Example NVIDIA CUDA 5.5 Kit template (buildkit.conf)

# Copyright International Business Machine Corporation, 2012-2013

# This information contains sample application programs in source language, which

# illustrates programming techniques on various operating platforms. You may copy,

# modify, and distribute these sample programs in any form without payment to IBM,

# for the purposes of developing, using, marketing or distributing application

# programs conforming to the application programming interface for the operating

# platform for which the sample programs are written. These examples have not been

# thoroughly tested under all conditions. IBM, therefore, cannot guarantee or

# imply reliability, serviceability, or function of these programs. The sample

# programs are provided "AS IS", without warranty of any kind. IBM shall not be

# liable for any damages arising out of your use of the sample programs.

# Each copy or any portion of these sample programs or any derivative work, must

# include a copyright notice as follows:

# (C) Copyright IBM Corp. 2012-2013.

# Kit Build File

#

# Copyright International Business Machine Corporation, 2012-2013

#

# This example buildkit.conf file may be used to build an NVIDIA CUDA 5.5 Kit

# for Red Hat Enterprise Linux 6.4 (x64).

# The Kit comprises 3 components, allowing you to install:

# 1. NVIDIA Display Driver

# 2. NVIDIA CUDA 5.5 Toolkit

# 3. NVIDIA CUDA 5.5 Samples and Documentation

#

# Refer to the buildkit manpage for further details.

#

kit:

basename=kit-CUDA

description=NVIDIA CUDA 5.5 Kit

version=5.5

release=1

ostype=Linux

kitlicense=Proprietary

kitrepo:

kitrepoid=rhels6.4

osbasename=rhels

osmajorversion=6

osminorversion=4

osarch=x86_64

kitcomponent:

basename=component-NVIDIA_Driver

description=NVIDIA Display Driver 319.37

serverroles=mgmt,compute

ospkgdeps=make

kitrepoid=rhels6.4

kitpkgdeps=nvidia-kmod,nvidia-modprobe,nvidia-settings,nvidia-xconfig,xorg-x11-drv-nvidia,xorg-x11-drv-nvidia-devel,xorg-x11-drv-nvidia-libs

postinstall=nvidia/createsymlink.sh

kitcomponent:

basename=component-CUDA_Toolkit

description=NVIDIA CUDA 5.5 Toolkit 5.5-22

serverroles=mgmt,compute

ospkgdeps=gcc-c++

kitrepoid=rhels6.4

kitpkgdeps=cuda-command-line-tools,cuda-core,cuda-core-libs,cuda-extra-libs,cuda-headers,cuda-license,cuda-misc,cuda-visual-tools

kitcomponent:

basename=component-CUDA_Samples

description=NVIDIA CUDA 5.5 Samples and Documentation 5.5-22

serverroles=mgmt,compute

kitrepoid=rhels6.4

kitpkgdeps=cuda-documentation,cuda-samples

kitpackage:

filename=nvidia-kmod-*.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=nvidia-modprobe-*.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=nvidia-settings-*.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=nvidia-xconfig-*.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=xorg-x11-drv-nvidia-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=xorg-x11-drv-nvidia-devel-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=xorg-x11-drv-nvidia-libs-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=nvidia-driver

kitpackage:

filename=cuda-command-line-tools-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-core-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-core-libs-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-extra-libs-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-headers-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-license-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-misc-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-visual-tools-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-toolkit

kitpackage:

filename=cuda-documentation-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-samples

kitpackage:

filename=cuda-samples-*.x86_64.rpm

kitrepoid=rhels6.4

# Method 1: Use pre-built RPM package

isexternalpkg=no

rpm_prebuiltdir=cuda-samples

Appendix B: createsymlink.sh

#!/bin/sh

# Copyright International Business Machine Corporation, 2012-2013

# This information contains sample application programs in source language, which

# illustrates programming techniques on various operating platforms. You may copy,

# modify, and distribute these sample programs in any form without payment to IBM,

# for the purposes of developing, using, marketing or distributing application

# programs conforming to the application programming interface for the operating

# platform for which the sample programs are written. These examples have not been

# thoroughly tested under all conditions. IBM, therefore, cannot guarantee or

# imply reliability, serviceability, or function of these programs. The sample

# programs are provided "AS IS", without warranty of any kind. IBM shall not be

# liable for any damages arising out of your use of the sample programs.

# Each copy or any portion of these sample programs or any derivative work, must

# include a copyright notice as follows:

# (C) Copyright IBM Corp. 2012-2013.

# createsymlink.sh

# The script creates the symbolic link required by the

# IBM Platform LSF elim for NVIDIA GPUs.

#

LIBNVIDIA="/usr/lib64/nvidia/libnvidia-ml.so"

LINK="/usr/lib64/libnvidia-ml.so"

# Proceed only if the NVIDIA driver packages installed the NVML library

if [ -e "$LIBNVIDIA" ]

then

    # -f replaces an existing file or link at $LINK, so re-running the script is harmless

    ln -sf "$LIBNVIDIA" "$LINK"

    # Restart LSF so that the GPU elim starts with the library in place

    /etc/init.d/lsf stop

    /etc/init.d/lsf start

fi

exit 0
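After the Kit's post-install script has run, the link can be checked on a compute node with a sketch along these lines. The function name check_nvml_link is hypothetical; by default it tests /usr/lib64/libnvidia-ml.so, the link created by createsymlink.sh, and it succeeds only if that path is a symbolic link whose target exists.

```shell
#!/bin/sh
# Hypothetical check: succeed only if $1 (default: the link created by
# createsymlink.sh) is a symbolic link whose target exists.
check_nvml_link() {
    link="${1:-/usr/lib64/libnvidia-ml.so}"
    # -L: path is a symlink; -e: follows the link, so a dangling link fails
    if [ -L "$link" ] && [ -e "$link" ]; then
        echo "OK: $link -> $(readlink "$link")"
        return 0
    fi
    echo "MISSING or broken: $link" >&2
    return 1
}

# Usage on a compute node; a non-zero exit status means the link is absent or dangling:
# check_nvml_link
```

A regular file at the link path also fails the check, since the elim setup in createsymlink.sh expects a link to the driver-installed library rather than a copy.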