SC'19 Recap

Last week was the annual Supercomputing conference, held this year in Denver, and it was its usual whirlwind of big product announcements, research presentations, vendor meetings, and catching up with old colleagues. As is the case every year, SC was both too short and too long; there is a long list of colleagues and vendors with whom I did not get a chance to meet, yet at the same time I left Denver on Friday feeling like I had been put through a meat grinder.

All in all it was a great conference, but it felt like it had the same anticipatory undertone I felt at ISC 2019. There were no major changes to the Top 500 list (strangely, that mysterious 300+ PF Sugon machine that was supposed to debut at ISC did not make an appearance in Denver). AMD Rome and memory-channel Optane are beginning to ship, but it seems like everyone’s got their nose to the grindstone in pursuit of achieving capable exascale by 2021.

As with every major HPC conference, I approached SC this year with the following broad objectives:

<ol><li>Sharing knowledge and ideas by contributing to the technical program and its workshops, tutorials, and BOFs with the goal of getting more momentum behind good ideas and steering research and roadmaps in a direction best aligned with where I think the HPC industry needs to go</li><li>Gathering intelligence across different technologies and market verticals to stay ahead of where technology and the community may be driving as a result of other parallel industries</li><li>Contributing to community development amongst storage and I/O researchers and practitioners with the goal of broadening the community and bringing more people and ideas to the table</li><li>Building and maintaining relationships with individual vendor representatives and peers so that I know to whom I can turn when new opportunities or challenges come up</li></ol>The things I took away from the conference are colored by these goals and the fact that I mostly work in high-performance storage systems design. If I missed any major themes or topics in this recap post, it was likely a reflection of the above goals and perspective.

<h2 id="before">Before the conference</h2>SC’19 started back in the early spring for me since I served on the technical papers committee and co-chaired the Parallel Data Systems Workshop this year. That all amounted to a predictable amount of work throughout the year, but there were two surprises that came up in October with respect to SC that are worth mentioning before we dive into the technical contents of the conference.

<h3>The “I am HPC Guru” campaign</h3>Jim Cownie had the brilliant idea in early October to launch a covert campaign to create “I am HPC Guru” pins for SC, and he enlisted a group of willing members of the HPC Twitter community to pitch in. I was fortunate enough to be invited to participate in the fun, and judging by the reach of the #IAmHPCGuru tag on Twitter during the conference, it was a wild success.

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td> </td></tr><tr><td class="tr-caption" style="font-size: 12.800000190734863px;">An allotment of “I am HPC Guru” pins. People who pitched in also got a commemorative larger-sized pin (shown outside the bag above) which was a calling card for members of the secret society.</td></tr></tbody></table>


Hats off to Jim for conceiving this great idea, seeing through the design and shipment of the pins, and being so inclusive with the whole idea. There are now hundreds of HPC_Guru pins all over the world thanks to Jim’s efforts (and a couple dozen still with me here in California…), and I think it was a really positive way to build the Twitter-HPC community.

<h3>The new job</h3>Life also threw me a bit of a curve ball in late October when I took on a new set of responsibilities at NERSC and changed from contributing to an R&D group to leading an operational storage team. This meant that, in addition to all the pre-conference commitments I had made with an eye towards longer-term storage technology strategy, I suddenly had to contextualize my goals with respect to a completely new role in tactical planning and deployment.

Whereas I’ve historically written off sales-oriented meetings at SC, having good relationships with vendor sales teams in addition to their engineers and product managers is now an essential component of my new position. As a result of wearing these two hats instead of one, the number of hard commitments I had over the course of the conference roughly doubled compared to previous years. About half of these meetings were private (and not things about which I could write), and they also reduced the time I could’ve otherwise spent getting into the weeds about upcoming technologies.

Because the conference was so broken up into private and public meetings for me this year, a chronological recounting of the conference (as I did for my SC’18 recap) would be full of odd gaps and not make a whole lot of sense. Instead, I will focus on a few of the juiciest topics I took away from the conference:

<ol><li>High-level trends that seemed to pop up repeatedly over the week</li><li>Intel’s disclosures around the Aurora/A21 system</li><li>Outcomes from the 2019 Parallel Data Systems Workshop (PDSW 2019)</li><li>The Perlmutter all-NVMe storage node architecture</li><li>DAOS and the 2019 DAOS User Group meeting</li><li>Everything else</li></ol>

<h2>High-level trends</h2>

It’s difficult to group together all of the disparate things I heard and learned over the week into crisp bundles that I would consider emerging trends, but there were a few broad topics that kept popping up that suggested the following:

#1 - Memory-channel 3D XPoint is now out in the wild at sufficient scale that a picture is beginning to form around where it fits in the I/O stack. The NEXTGenIO project and Intel DAOS both demonstrated the performance achievable when 3D XPoint is integrated into larger systems this year, and the acceleration it offers can be staggering when a sensible software framework is built around persistent memory to bridge it with other media (like flash) and higher-level functionality (like parallel storage). Michèle Weiland and Adrian Jackson presented their successes with the NEXTGenIO project throughout the week, most notably in the technical papers track (see “An early evaluation of Intel’s Optane DC persistent memory module and its impact on high-performance scientific applications”) and across several smaller events (e.g., Adrian presented performance results, detailed in his EPCC blog post, at the Multi-Level Memory BOF). DAOS also made a splash on IO-500; more on this below.

#2 - The I/O ecosystem developed in preparation for the manycore era is making the transition from pure research to practical engineering effort. As the first generation of 7nm CPUs hit the market with KNL-like core counts and massive scale-up GPU node architectures are being announced by every major HPC silicon provider, latency-hiding techniques for I/O are becoming a hot topic. Asynchronous I/O—that is, techniques that allow an application to continue computing while a write I/O operation is still happening—came up a few times, and this technique is also moving up in the software stack from system software (such as DAOS, WekaIO, and VAST) into middleware (MPI-IO and HDF5). I touch on this in the PDSW section below.

#3 - Innovation in HPC storage is moving away from the data plane and towards the full data life cycle. Whereas focus in HPC I/O has traditionally revolved around making I/O systems as fast as possible, research and product announcements this year seemed to gravitate towards data management, that is, how to manage the placement of data before, during, and after I/O. Proprietary frameworks for data migration, policy management, tiering, and system-level analytics and intelligence (backed by serious vendor investment; see Cray ClusterStor Data Services and DDN STRATAGEM) are popping up across the storage appliance market as a differentiator atop open-source software like Lustre, and research around applying AI to optimize data placement is maturing from novel research into product engineering.

#4 - Scientific workflows, and the parallels they have with enterprise and hyperscale markets, are starting to be taken seriously by technology providers. Vendors have begun to take ownership of the data movement challenges that exist between bursts of compute-intensive jobs. Advances aimed at edge computing are becoming surprisingly relevant to HPC since decentralized data that is far away from compute is, in a sense, how HPC has done storage for decades. Whether they be sensors distributed across billions of cell phones, thousands of non-volatile storage media distributed across an exascale computing system, or detectors deployed at giant telescopes relying on a supercomputer for image processing, there is a common set of data management, movement, and remote processing challenges whose solutions can be applied across the board.

<h2 id="splash">Intel’s big splash</h2>Following on their big system-level disclosures at ISC’19, Intel’s disclosure of the ALCF exascale system node architecture and the unveiling of their software strategy seemed to be the biggest splash of SC’19. I was not actually at the Intel DevCon keynote where Raja Koduri made the announcements, but his slides on Xe and oneAPI are available online.

The node architecture is, at a glance, very similar to the Summit node architecture today:

<blockquote class="twitter-tweet"><div dir="ltr" lang="en">Aurora #supercomputer @argonne will have nodes with 2 Sapphire Rapids CPUs and 6 Ponte Vecchio GPUs with unified memory architecture #SC19 #HPC #AI #Exascale #GPU pic.twitter.com/HTGMnYh7AY</div>

— HPC Guru (@HPC_Guru) November 18, 2019</blockquote>From the slide and accompanying discussion on Twitter, there was quite a lot unveiled about the node architecture. Each node will have:

<ul><li>Two Sapphire Rapids Xeons (which appear to have 8 channels of DDR in the aforementioned slide) and six Ponte Vecchio Intel GPUs</li><li>A CXL-based “Xe Link” router provides all-to-all connectivity between the GPUs, presumably comparable to (but more standards-based than) NVLink/NVSwitch, for a unified memory space</li><li>Eight Slingshot NIC ports per node, which is 1.6 Tbit/sec of injection bandwidth</li><li>A “Rambo Cache” that sits between HBM, GPU, and CPU that presumably reduces NUMA effects for hot data that is being touched by many computing elements</li><li>A “matrix engine” (which sounds an awful lot like NVIDIA’s tensor cores) in each GPU</li></ul><div>This was an extremely daring release of information, as Intel has now publicly committed to a 7nm GPU part (comparable to TSMC’s 5nm process), along with a high-yield EMIB process (their chiplet interconnect for HBM integration) and Foveros (their 3D die stacking for Rambo integration), in 2021.</div>

[Figure: SYCL vs. Kokkos vs. native on NVIDIA and Intel architectures]

These early results are encouraging: with the exception of KNL, the SYCL ecosystem already looks viable as a performance-portable framework. The same is generally true for more complex computational kernels as well, as presented by Istvan Reguly from Pázmány Péter Catholic University:

[Figure: Performance portability figure of merit for a complex kernel using different performance-portable parallel runtimes]

Intel's choice to back an open standard rather than develop its own proprietary APIs for each accelerator type was a very smart decision, as it looks like they are already making up lost ground against NVIDIA in building a robust software ecosystem around their accelerator technologies. The fact that these presentations were given by application scientists, not Intel engineers, really underscores this.

<h2 id="pdsw">PDSW 2019</h2>This year I had the great honor of co-chairing the Parallel Data Systems Workshop, the premier data and storage workshop at SC, along with the esteemed Phil Carns (creator of Darshan and PVFS2/OrangeFS, among other things). We tried to broaden the scope of the workshop to be more inclusive of “cloudy” storage and data topics, and we also explicitly tried to build the program to include discussion about data management that ran tangential to traditional HPC-focused storage and I/O.

The proceedings are already online in an interim location hosted by ACM, and the full proceedings will be published by IEEE TCHPC. Slides are available on the PDSW website, and I tried to tag my realtime thoughts using #pdsw19 on Twitter.

<h3>Alluxio Keynote</h3>Our keynote speaker was Haoyuan Li, founder of Alluxio, who gave a brilliant talk about the data orchestration framework he developed at AMPLab and went on to commercialize. It is an abstraction that stitches together different storage resources (file systems, object stores, etc.) into a single namespace that applications can use to read and write data in a way that hides the complexity of tiered storage. It was designed towards the beginning of the “Big Data revolution” with a specific eye towards providing a common interface for data accessibility; by writing an application against the Alluxio API, it would be made future-proof if the HDFS or S3 APIs fizzled, since Alluxio hides the specific API and semantics of each native storage interface from user applications.

Had something like this existed in the early days of HPC, there’s a good chance that we would not be stuck using POSIX I/O as the least common denominator for data access. That said, Alluxio does solve a slightly easier problem in that it targets analytics workloads that are read-intensive—for example, it does not provide a means for applications to do random writes, and so it provides only a subset of the full semantics that some more general-purpose I/O interfaces (such as file access) may provide. In making this trade-off though, it is able to aggressively cache data from any storage backend in a distributed memory space, and Alluxio has a configurable cache eviction policy for predictable workflows.

In describing the motivation for the Alluxio design, Haoyuan had some interesting insights. In particular, he pointed out that there is a growing movement away from the hyperconverged hardware architecture that motivated Hadoop and HDFS:

<div class="separator" style="clear: both; text-align: center;"> </div>


The whole “move compute to where the data is!” model for Hadoop has always struck me as rather fanciful in practice; it only works in single-tenant environments where there’s no chance of someone else’s compute already existing where your data is, and it imposes a strict coupling between how you scale data and analytics. As it turns out, the data analytics industry is also waking up to that, and as Haoyuan’s slide above shows, separating storage from compute gives much more flexibility in how you scale compute with respect to data, but at the cost of increased complexity in data management. The whole point of Alluxio is to minimize that cost of complexity by making data look and feel local by (1) providing a single namespace and API, and (2) using distributed memory caching to make data access perform as well as if compute and memory were colocated.
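To make the two mechanisms above concrete, here is a minimal sketch of a single namespace layered over several backends with a memory cache in front. It is not the Alluxio API: the `UnifiedNamespace` class, its method names, the dict-based stand-in backends, and the choice of LRU eviction are all assumptions made for illustration.

```python
from collections import OrderedDict


class UnifiedNamespace:
    """Toy single namespace over several backends with an LRU read cache.

    All names here are hypothetical; this is not the Alluxio API.
    """

    def __init__(self, cache_capacity):
        self.mounts = {}            # mount prefix -> backend (a dict here)
        self.cache = OrderedDict()  # path -> bytes, ordered by recency
        self.cache_capacity = cache_capacity
        self.backend_reads = 0      # how often we had to go to slow storage

    def mount(self, prefix, backend):
        self.mounts[prefix] = backend

    def _resolve(self, path):
        # Longest-prefix match decides which backend owns the path.
        matches = [p for p in self.mounts if path.startswith(p)]
        if not matches:
            raise FileNotFoundError(path)
        prefix = max(matches, key=len)
        return self.mounts[prefix], path[len(prefix):]

    def read(self, path):
        if path in self.cache:
            self.cache.move_to_end(path)  # mark as most recently used
            return self.cache[path]
        backend, key = self._resolve(path)
        data = backend[key]
        self.backend_reads += 1
        self.cache[path] = data
        if len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)  # evict least recently used
        return data


# Two stand-in backends: one "HDFS-like", one "S3-like".
hdfs = {"logs/day1": b"on-prem data"}
s3 = {"logs/day2": b"cloud data"}

ns = UnifiedNamespace(cache_capacity=1)
ns.mount("/onprem/", hdfs)
ns.mount("/cloud/", s3)

first = ns.read("/onprem/logs/day1")   # goes to the backend
second = ns.read("/onprem/logs/day1")  # served from the cache
third = ns.read("/cloud/logs/day2")    # evicts the first entry
```

The application only ever sees paths in one namespace; which backend holds the bytes, and whether they came from cache, is hidden behind `read`.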

This is a bit ironic since HPC has been disaggregating storage from compute for decades; HPC systems have tended to scale compute capability far faster than storage. However, the HPC community has yet to address the added complexity of doing this, and we are still struggling to simplify storage tiering for our users. This is only getting worse as some centers slide back into hyperconverged node designs by incorporating SSDs into each compute node. This causes different tiers to spread data across multiple namespaces and further complicates data access since the semantics across those namespaces differ. For example, it’s not sufficient to know that

<ul><li>/local is the fastest tier</li><li>/scratch is less fast</li><li>/home is slow</li></ul>since

<ul><li>/local is only coherent with other processes sharing the same physical compute node</li><li>/scratch is globally coherent</li><li>/home is globally coherent</li></ul>Alluxio is not the solution to this problem at present because it is optimized for write-once, read-many workloads whereas HPC does have to support random writes. That said, HPC storage systems that incorporate the same design goals as Alluxio (connecting many types of storage under a single namespace, providing a restricted set of semantics, and applying aggressive caching to deliver local-like performance) hold a lot of promise. Perhaps it’s no surprise that every serious parallel file system on the market is beginning to implement features like this—think Lustre File-Level Redundancy (FLR) and Persistent Client Caching (LPCC), Spectrum Scale AFM, and the core two-tier design of WekaIO.
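The speed-versus-coherence distinction between /local, /scratch, and /home described above can be sketched as a small lookup. The `Tier` type and the `pick_tier` helper are hypothetical; only the relative speed ordering and the coherence scope of each path come from the lists above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    path: str
    speed_rank: int          # lower is faster; ordering taken from the list above
    globally_coherent: bool  # False means coherent only within one node


TIERS = [
    Tier("/local", 0, False),
    Tier("/scratch", 1, True),
    Tier("/home", 2, True),
]


def pick_tier(shared_across_nodes):
    """Return the fastest tier whose coherence is sufficient."""
    candidates = [t for t in TIERS if t.globally_coherent or not shared_across_nodes]
    return min(candidates, key=lambda t: t.speed_rank)


node_private = pick_tier(shared_across_nodes=False)
shared = pick_tier(shared_across_nodes=True)
print(node_private.path, shared.path)
```

Even in this toy form, the user has to reason about two properties per tier rather than one, which is the complexity a single namespace with well-defined semantics would hide.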

Haoyuan also presented a few case studies that showcased the ability of Alluxio to ease the transition from on-premise infrastructure (like Hadoop with HDFS) to hybrid cloud (e.g., run Presto across datasets both in older on-prem HDFS and newer S3 buckets). It seems to be very fashionable to run analytics directly against data in object stores in industry, and Alluxio essentially gives such data more dynamism by being the place where active data can be staged for processing on demand. Because it is a stateless orchestration layer rather than a storage system itself, Alluxio also seems nicely compatible with dynamic provisioning of compute resources. In this sense, it may be an interesting internship project to see if Alluxio could be deployed on an HPC system to bridge a large data analytics job with an off-system object store. Get in touch with me if you know a student who may want to try this!

<h3>Asynchronous I/O</h3>Middleware for asynchronous I/O came up in two different papers this year. The first, “Enabling Transparent Asynchronous I/O using Background Threads” by Tang et al., described a new pluggable runtime for HDF5 that processes standard HDF5 I/O requests asynchronously. It does this by copying I/O requests and their metadata into a special buffer, putting those requests on a queue that is managed by the asynchronous runtime, building a directed graph of all requests’ dependencies, and dispatching I/Os alongside regular application execution using a lightweight (Argobots-based) asynchronous worker pool.

What this amounts to is that a standard HDF5 write call wouldn’t block until the I/O has been committed to disk somewhere; instead, it returns immediately after the async runtime makes a copy of the data to be written into its own private memory buffer. The application is then free to continue computing, while an Argobots thread begins buffering and dispatching outstanding asynchronous I/O calls. The performance that results from being able to overlap I/O with computation is remarkable:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">I/O speedup at scale as a result of the asynchronous runtime backend for HDF5 presented by Tang et al.</td></tr></tbody></table>


What’s more impressive, though, is that this backend is almost entirely transparent to the user application; in its simplest form, it can be enabled by setting a single environment variable.
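The copy-then-return behavior described above can be illustrated with a minimal write-behind sketch. This is not the HDF5 asynchronous runtime from the paper: it uses a plain Python thread and queue instead of Argobots, the `AsyncWriter` class and its methods are hypothetical, and a single FIFO worker stands in for the dependency graph.

```python
import os
import queue
import tempfile
import threading


class AsyncWriter:
    """Toy write-behind runtime: copy the buffer, queue it, return at once."""

    def __init__(self):
        self._requests = queue.Queue()
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def write(self, path, offset, buf):
        # Copy so the caller may immediately reuse or modify its buffer.
        self._requests.put((path, offset, bytes(buf)))

    def _drain(self):
        # A single worker processes requests in FIFO order, which stands in
        # for the dependency graph described in the paper.
        while True:
            item = self._requests.get()
            if item is None:
                self._requests.task_done()
                return
            path, offset, data = item
            with open(path, "r+b") as f:
                f.seek(offset)
                f.write(data)
            self._requests.task_done()

    def wait(self):
        """Block until every queued write has been written out."""
        self._requests.join()

    def close(self):
        self._requests.put(None)
        self._worker.join()


path = os.path.join(tempfile.mkdtemp(), "out.bin")
open(path, "wb").close()  # create an empty file to write into

writer = AsyncWriter()
buf = bytearray(b"step-0")
writer.write(path, 0, buf)
buf[:] = b"XXXXXX"  # safe: the runtime holds its own copy
writer.write(path, 6, b"step-1")
writer.wait()
writer.close()

with open(path, "rb") as f:
    contents = f.read()
```

The application pays only for the memory copy inside `write`; the cost of the actual I/O is hidden as long as there is computation to overlap it with before `wait` is called.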

Later in the day, Lucho Ionkov presented a much more novel (research-y?) asynchronous I/O runtime in his paper, “A Foundation for Automated Placement of Data” which glued together DRepl (an abstraction layer between scientific applications and storage architectures, vaguely similar to what Alluxio aims to do), TCASM (a Linux kernel modification that allows processes to share memory), and Hop (an expressive key-value store with tunable performance/resilience requirements). The resulting runtime provides a high-level interface for applications to express I/O and data placement as a series of attach, publish, and re-attach operations to logical regions of memory. The runtime then manages the actual data movement (whether it be between nodes or to persistent storage) asynchronously.

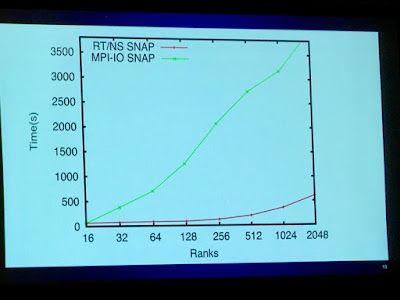

Again, the net result in speedup as the problem size scales up is impressive:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">I/O speedup at scale using the asynchronous I/O runtime presented by Ionkov in Otstott et al.</td></tr></tbody></table>As with the asynchronous HDF5 paper, performance gets better with scale as the increasing costs of doing I/O at scale are amortized by overlapping it with computation. In contrast to HDF5 though, this runtime comes with a completely new application API, so one would need to convert an application’s critical I/O routines to use this framework instead of POSIX I/O. The runtime is also pretty heavyweight in that it requires a separate global data placement “nameserver,” a custom Linux kernel, and buy-in to the new memory model. In that sense, this is a much more research-oriented framework, but the ideas it validates may someday appear in the design of a fully integrated framework that incorporates both an application runtime and a storage system.


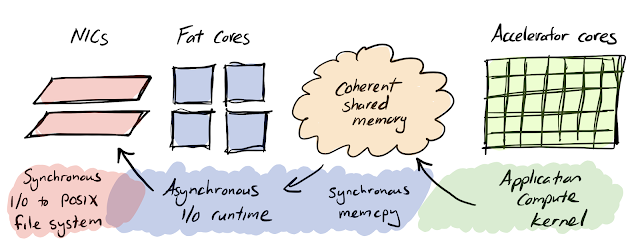

Why is this important? These asynchronous I/O runtimes are making a lot more sense in the era of heterogeneous computing where accelerators (think GPUs) really aren’t good at driving a full kernel-based I/O pipeline. Instead of running a full I/O stack and enforcing strict consistency (i.e., serializing I/O) on a lightweight accelerator core, having an asynchronous runtime running on a fat core that simply copies an I/O buffer from accelerator memory to slower memory before releasing program control back to the accelerator allows the accelerator to spend less time doing what it’s terrible at doing (ordering I/O operations) and more time computing. At the same time, the fat core that is running the asynchronous I/O runtime can then operate on that copied I/O buffer on its own time, reorder and serialize operations to ensure consistency, and jump into and out of the kernel to enforce file permissions without interrupting the accelerator:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Sketch of how an asynchronous I/O runtime might map to a heterogeneous node architecture</td></tr></tbody></table>


Ron Oldfield did raise a really great consideration during PDSW about this though: at the end of the day, the asynchronous I/O runtime still has to share network resources with the application’s message passing runtime (e.g., MPI). He alluded to work done a decade ago that found that asynchronous I/O was often stomping on MPI traffic since both MPI and I/O could happen at the same time. Without some kind of awareness or coordination between the asynchronous I/O runtime and the application communication runtime, this sort of scheme is prone to self-interference when running a real application.

Given this, the right place to integrate an asynchronous I/O runtime might be inside the message passing runtime itself (e.g., MPI-IO). This way the asynchronous I/O scheduler could also consider the outstanding asynchronous messages it must pass and avoid dispatching too many competing network transfers at the same time. Unfortunately this then places a complex burden of serialization and synchronization on the runtime, and this starts to look a lot like just throwing messages at the NIC and letting it figure out the correct ordering. The principal advantage here would be that the runtime has a lot more visibility into user intent (and may have more spare processing capacity if most of the application time is spent on an accelerator), so it could afford to be smarter about how it builds its dependency graph.
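As a rough illustration of what such coordination could look like, the sketch below arbitrates a fixed number of network transfer slots between message traffic and asynchronous I/O. Everything in it is hypothetical (the `NetworkScheduler` class, the slot model, and the strict message-first policy); it is not taken from any MPI implementation or from the work Ron referenced.

```python
from collections import deque


class NetworkScheduler:
    """Toy arbiter that lets message traffic take priority over async I/O.

    Hypothetical design: a fixed number of transfer slots per scheduling
    step, filled with pending messages first and I/O with what is left.
    """

    def __init__(self, slots_per_step):
        self.slots_per_step = slots_per_step
        self.messages = deque()
        self.io_requests = deque()

    def post_message(self, name):
        self.messages.append(name)

    def post_io(self, name):
        self.io_requests.append(name)

    def step(self):
        """Dispatch up to slots_per_step transfers and return their names."""
        dispatched = []
        while self.messages and len(dispatched) < self.slots_per_step:
            dispatched.append(self.messages.popleft())
        while self.io_requests and len(dispatched) < self.slots_per_step:
            dispatched.append(self.io_requests.popleft())
        return dispatched


sched = NetworkScheduler(slots_per_step=2)
sched.post_io("checkpoint-0")
sched.post_io("checkpoint-1")
sched.post_message("halo-exchange-0")
sched.post_message("halo-exchange-1")
sched.post_message("halo-exchange-2")

timeline = [sched.step() for _ in range(3)]
for step_number, transfers in enumerate(timeline):
    print(step_number, transfers)
```

A strict priority policy like this one would starve I/O under sustained message traffic, so a real scheduler would need some notion of fairness or deadlines; the point is only that a single runtime that sees both queues can make that decision at all.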

<h3>Analytics for Runtime and Operations</h3>No computing-related workshop would be complete without a smattering of artificial intelligence and machine learning, and PDSW was no different this year. Two papers were presented that attempted to use machine learning to predict parallel I/O performance in slightly different ways.

Suren Byna presented “Active Learning-based Automatic Tuning and Prediction of Parallel I/O Performance” where the authors developed an approach for autotuning parallel I/O (specifically using MPI-IO hints and Lustre striping parameters) using active learning to predict the optimal values for their tuning parameters. They used two different approaches, and the faster one uses predicted performance to infer optimal tuning values. Given how many factors actually come into play in parallel I/O performance on production systems, their model was able to predict I/O performance quite well under a range of I/O patterns:

<div class="separator" style="clear: both; text-align: center;"> </div>


Bing Xie et al. presented “Applying Machine Learning to Understand Write Performance of Large-scale Parallel Filesystems” which pursued a similar line of work (using machine learning to predict I/O performance) but with a slightly different goal. Xie’s goal was to identify the factors which most strongly affect predicted I/O performance, and she found that write performance was most adversely affected by metadata load and load imbalance on Blue Gene/Q and GPFS, whereas Cray XK7 and Lustre were more affected by aggregate file system load and load imbalance. This system-centric work laid out a more sophisticated blueprint for identifying causal relationships between poor I/O performance and system-level health events, and I think applying these approaches to the dataset I published last year with my Year in the Life of a Parallel File System paper might identify some interesting emergent relationships between bad performance and the subtle factors to which they can be attributed.

Why is this important? Industry is beginning to take notice that it is no longer sufficient to just report the here-and-now of how parallel file systems are behaving, and more sophisticated analytics engines are being co-deployed with very large systems. For example, the Summit system at Oak Ridge made a splash in October by announcing the real-time analytics engine that was implemented on top of it, and Cray View is a similar analytics-capable engine built atop Lustre that Cray offers as a part of its ClusterStor lineup. I’m not sure if DDN has something comparable, but their recent purchase of Tintri and its robust, enterprise-focused analytics engine means that they hold IP that can undoubtedly be applied to its HPC-focused storage product portfolio.

Being able to predict performance (and the conditions that cause it to degrade!) is the holy grail of parallel I/O systems management, and it’s a sure bet that all the HPC storage vendors are watching research in this area very closely to see what ideas they can pluck from the community to add value to their proprietary analytics engines. The fact that AI is being applied to production system data and yielding useful and actionable outcomes gives legs to this general idea of AI for self-driving systems. The talks at PDSW this year were only demonstrations, not hardened products, but these ad-hoc or small-scale demonstrations are moving us in the right direction.

<h3>My Talk on Data Motion</h3>I also coauthored and presented a paper at PDSW this year that was an exploratory study of how we can understand data movement throughout an entire data center. The goal of the entire paper, “Understanding Data Motion in the Modern HPC Data Center,” was to generate this diagram that shows how much data flows between different systems at NERSC:


<div class="separator" style="clear: both; text-align: center;"> </div>


I won’t recount the technical content of the talk here, but the paper is open access for those interested. The essence of the study is that we showed that it is possible to examine data motion beyond the context of individual jobs and begin tying together entire workflows, but there’s a lot of supporting work required to shore up the tools and telemetry from which this analysis draws. The paper was very much a long-form work in progress, and I’d be interested in hearing from anyone who is interested in pursuing this work further.

<h2 id="e1kf">Scale-up highly available NVMe hardware</h2>Although it didn’t make many headlines (as storage rarely does), Cray announced its new ClusterStor E1000 platform shortly before SC and had some of their E1000-F all-NVMe enclosures on display at a few booths. I normally don’t care too much about storage enclosures (it’s all just sheet metal, right?), but this announcement was special to me because it is the hardware platform that is going into NERSC’s Perlmutter system in 2020, and I’ve been involved with the different iterations of this hardware design for over a year now.

It’s very gratifying to see something start out as a CAD drawing and a block diagram and grow up into actual hardware:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">The E1000-F all-NVMe enclosure</td></tr></tbody></table>


Torben Kling Petersen gave a talk at the Exhibitor Forum disclosing the details of the hardware design on behalf of Cray, and it looks like they’ve made just about everything surrounding the E1000 public:

<div class="separator" style="clear: both; text-align: center;"> </div>


The foundation for this platform is the E1000-F high-availability enclosure as shown in the above slide. It has two separate Rome-based servers (“controllers”) and 24 U.2 NVMe slots capable of PCIe Gen4. Each Rome controller has slots for up to three 200 Gbit NICs; doing the math, this gives a very nicely balanced design that is implemented entirely without PCIe switches:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Cartoon block diagram for one half of the E1000-F chassis. Note that the NVMe read rates (violet text) are assumed based on Samsung PM1733 specs and performance projections that Petersen presented. Also note that each NVMe drive is 2x2 PCIe Gen4 with multipath to the other Rome controller (not shown).</td></tr></tbody></table>I visited the booth of the ODM with whom Cray worked to develop this node design and was fortunate enough to meet the node architects from both sides who gave me a really helpful breakdown of the design. Physically, the 2U chassis is laid out something like this:


<div class="separator" style="clear: both; text-align: center;"> </div>


Just about everything is both hot-swappable and fully redundant. The entire system can be powered and cooled off a single 1.2 kW(?) power supply, and all the fans are hot-swappable and configured as 5+1:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Fans are all individually replaceable and configured in 5+1. You can also see the NVMe backplanes, attached to an active midplane (not shown), through the open fan slot.</td></tr></tbody></table>


All the fans are on the same pulse-width modulator (PWM), so they all operate at the same speed and provide even airflow as long as they are properly powered. My recollection from what the architect told me is that the PWM signal is provided by an FPGA on the midplane which also handles drive power-up. Because there is only a single midplane and this power/cooling controller lives on it, this power/cooling FPGA is also configured redundantly as 1+1. Thus, while the midplane itself is not redundant or field-replaceable, the active components on it are, and it would take physical damage (e.g., someone punching a hole through it and breaking the PCB traces) to knock the whole chassis offline.

Each chassis has two independent node boards that are hot-pluggable and self-contained:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">One of the E1000-F node sleds with its cover popped off at the Cray booth</td></tr></tbody></table>Each node board is wrapped in a sheet metal sled and has a screwed-on lid. The whole node sled was designed by the ODM to be a field-replaceable unit (FRU), so doing something like a DIMM swap does require a screwdriver to remove the top cover. However, it’s ultimately up to OEMs to decide how to break down FRUs.


The ODM had a bare controller board at its booth which looks like this:

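<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">E1000-F bare controller board</td></tr></tbody></table>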

There are two M.2 PCIe Gen4 slots for mirrored boot drives and a pair of big hot-plug block connectors in the front of the board for redundant power and 48 lanes of PCIe Gen4 for the 24x U.2 drives hanging off the midplane. There’s a single riser slot for two standard HHHL PCIe add-in cards where two NICs plug in, and a third slot in the OCP form factor where the third NIC can go. The rear of the controller sled shows this arrangement:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Rear view of a single Rome controller</td></tr></tbody></table>It looks like there’s a single RJ45 port (for LOM?), power and reset buttons, a single USB 3 port, and a mini DisplayPort for crash carting.
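The lane and NIC counts quoted above lend themselves to a quick balance check. The sketch below is only back-of-the-envelope arithmetic: it uses the raw PCIe Gen4 signaling rate (16 GT/s per lane with 128b/130b encoding) and ignores protocol overhead, so the drive-side figure is an upper bound on link bandwidth rather than what the SSDs will actually deliver. The function names are my own and purely illustrative.

```python
# Rough balance check for one E1000-F controller: raw PCIe Gen4 bandwidth
# to the drives versus aggregate NIC bandwidth. Link rates only; protocol
# overhead and real SSD throughput are deliberately ignored.

PCIE_GEN4_GT_PER_S = 16.0            # giga-transfers per second per lane
ENCODING_EFFICIENCY = 128.0 / 130.0  # 128b/130b line encoding


def pcie_gen4_gbytes_per_s(lanes: int) -> float:
    """Raw one-direction PCIe Gen4 bandwidth in GB/s for a given lane count."""
    return lanes * PCIE_GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8.0


def nic_gbytes_per_s(nics: int, gbit_per_nic: float = 200.0) -> float:
    """Aggregate NIC bandwidth in GB/s."""
    return nics * gbit_per_nic / 8.0


# 48 lanes of Gen4 reach the 24 U.2 drives from each controller,
# and each controller has slots for up to three 200 Gbit NICs.
drive_side = pcie_gen4_gbytes_per_s(48)
network_side = nic_gbytes_per_s(3)

print(f"drive-side PCIe: {drive_side:.1f} GB/s")
print(f"network side:    {network_side:.1f} GB/s")
print(f"ratio:           {drive_side / network_side:.2f}")
```

With these inputs the raw PCIe bandwidth to the drives comes out to roughly 94.5 GB/s against 75 GB/s of network, which leaves some margin for PCIe overheads and drives that fall short of line rate.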


When Cray announced the E1000-F, HPCwire ran a block diagram of the complete chassis design that suggested that heartbeating would be done through a non-transparent bridge (NTB) implemented on the AMD Rome host interface. This was a little worrisome since AMD has yet to release the proper drivers to enable this NTB for Linux in a functional way; this simple fact is leading other ODMs towards a more conservative node design where a third-party nonblocking PCIe switch is added simply to provide a functioning NTB. When I asked the architect about this, though, he revealed that the E1000-F also has an internal gigabit Ethernet loop between both controllers for heartbeating which completely obviates the need to rely on any NTB for failover.

Another interesting thing I learned while talking to the E1000-F designers is that the power supply configuration gives a lot of runway for the overall system design:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">One of the two power supply sleds for the E1000-F chassis. Lots of free real estate remains and is currently occupied by bus bars.</td></tr></tbody></table>The current power supply is (I believe) ~1200 W, and the carrier sled on which it is mounted is mostly empty space taken up by two fat bus bars that reach all the way to the front of it. In leaving all of this space in the sled, it will be fully possible to build a physically compatible PSU sled that delivers significantly more power to the U.2 NVMe drives and host controllers if the power consumption of the controllers or the NVMe drives increases in the future. The ODM confirmed that the cooling fans have similar headroom and should allow the whole enclosure to support a higher power and thermal load by just upgrading the power and controller FRUs.


This point is important because the performance of PCIe Gen4 SSDs is actually capped by their power consumption. If you look at product sheets for ruler SSDs (M.2, NF1, and E1.S), you will find that their performance is universally lower than that of their U.2 and HHHL variants because the ruler standards limit power to 8-12W compared to U.2/HHHL’s ~25W. This E1000-F chassis is designed as-is for 25W U.2 drives, but there are already proposals to push individual SSD power up to 40W and beyond. Given this trend and the high bandwidth available over a PCIe Gen4 x4 connector, it’s entirely possible that there will be a demand for higher-power NVMe enclosures as Gen4 matures and people want to drive Gen4 NVMe at line rate.
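To make the power headroom argument concrete, here is a small sketch of the drive power budget at the two per-drive figures mentioned above. It assumes the ~1200 W supply rating, which is only my recollection and not a confirmed spec, and it says nothing about what the controllers, fans, and midplane actually draw; the helper name is hypothetical.

```python
# Illustrative drive power budget for a 24-slot enclosure. The 1200 W
# supply rating is an unconfirmed figure, and controller, fan, and
# midplane power draw are unknown here.

def drive_power_budget(n_drives: int, watts_per_drive: float, psu_watts: float):
    """Return (total drive power, power left over for everything else)."""
    drive_watts = n_drives * watts_per_drive
    return drive_watts, psu_watts - drive_watts


for watts_per_drive in (25.0, 40.0):
    drive_watts, remaining = drive_power_budget(24, watts_per_drive, 1200.0)
    print(f"{watts_per_drive:.0f} W drives: {drive_watts:.0f} W for SSDs, "
          f"{remaining:.0f} W left for controllers, fans, and midplane")
```

At 25 W per drive the SSDs account for about half of a 1200 W supply, while at 40 W they would consume most of it, which is why room for a larger PSU in the same sled matters.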

<h2 id="daos">DAOS User Group</h2>The 2019 DAOS User Group was held on Wednesday in a hotel adjacent to the main convention center. Contrary to previous years in which I attended, this meeting felt like a real user group; there were presenters from several different organizations, none of whom directly contribute to or are contractual customers of DAOS. There was also real performance data, which largely centered on the insanely high IO-500 benchmark score that DAOS posted earlier in the week:

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Bandwidth spread on the IO-500’s IOR test suite</td></tr></tbody></table>These numbers are using a pretty modest server environment and client count (24 DAOS servers, 26 client nodes, 28 ranks per client, dual-rail OPA100) and use the native DAOS API. What I didn’t snap a photo of are the crazy metadata rates which posted a geometric mean of 4.7 million IOPS; by comparison, the 250 PB Alpine file system attached to the Summit supercomputer at Oak Ridge posted 1.2 million IOPS using more than 500 clients. To the extent that it was meant to address the IOPS limitations intrinsic to traditional parallel file systems, the DAOS design is looking like a resounding success.


According to the speaker, the metadata performance of this IO-500 run was not limited by any server-side resources, so adding more clients (like WekaIO’s top-scoring run with 345 clients) could have pushed this number higher. It was also stated that the staggering IOR read performance was limited by the aggregate Optane DIMM bandwidth, which is a testament to how highly optimized the data path is.
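One crude way to put the client counts above in perspective is to normalize the metadata rates per client node. This is not how IO-500 scores are defined, and the Alpine run used "more than 500" clients, so dividing by exactly 500 overstates Alpine's per-client rate; treat the result as an order-of-magnitude illustration only.

```python
# Crude per-client normalization of the IO-500 metadata rates quoted
# above. Alpine's client count is taken as 500, a lower bound, so its
# per-client figure here is an upper bound.

def iops_per_client(total_iops: float, clients: int) -> float:
    """Average metadata operations per second per client node."""
    return total_iops / clients


daos_per_client = iops_per_client(4.7e6, 26)
alpine_per_client = iops_per_client(1.2e6, 500)

print(f"DAOS:   {daos_per_client:,.0f} IOPS per client")
print(f"Alpine: {alpine_per_client:,.0f} IOPS per client (upper bound)")
print(f"ratio:  {daos_per_client / alpine_per_client:.0f}x")
```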

<h3>Actually using DAOS</h3>This is all using the DAOS native API though, and unless you intend to rewrite all your open()s and write()s as daos_pool_connect() + daos_cont_open() + daos_array_open()s and daos_array_write()s, it’s hard to tell what this really means in terms of real-world performance. Fortunately there was a great set of talks about the DAOS POSIX compatibility layer and related middleware. I described the POSIX middleware a little in my recap of ISC’19, but it’s much clearer now exactly how a POSIX application may be adapted to use DAOS. Ultimately, there are three options that DAOS provides natively:

<ul><li>libdfs, which is a DAOS library that provides a POSIX-like (but not POSIX-compatible) API into DAOS. You still have to connect to a pool and open a container, but instead of reading and writing to arrays, you read and write arbitrary buffers to byte offsets within file-like objects. These objects exist in a hierarchical namespace, and there are functions provided by libdfs that map directly to POSIX operations like mkdir, rmdir, statfs, etc. Using libdfs, you would still have to rewrite your POSIX I/O calls, but there would be a much smaller semantic gap since POSIX files and directories resemble the files and directories provided by libdfs. A great example of what libdfs looks like can be found in the IOR DFS backend code.</li><li>dfuse, which is a FUSE client written on top of libdfs. With this, you literally get a file system mount point which POSIX applications can interact with natively. Because this uses FUSE though, such accesses are still generating system calls and memory copies which come with steep latency penalties.</li><li>libioil, which is a POSIX interception library. This is what you’d LD_PRELOAD in front of a standard application, and it does the remapping of genuine POSIX API calls into libdfs-native calls without ever going through the kernel.</li></ul>
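As a sketch of what the interception option looks like in practice, the snippet below launches an unmodified program with a library added to `LD_PRELOAD`. Only the `LD_PRELOAD` mechanism itself is standard; the library path, the application, and the helper names are hypothetical placeholders, and a real deployment should follow the DAOS documentation for where libioil is installed and how it is meant to be used.

```python
# Launching an unmodified POSIX application with an interception library
# preloaded. Paths and helper names are hypothetical placeholders.
import os
import subprocess


def with_preload(library_path, env=None):
    """Return a copy of env (default: os.environ) with library_path
    prepended to LD_PRELOAD, keeping any libraries already listed."""
    new_env = dict(os.environ if env is None else env)
    existing = new_env.get("LD_PRELOAD", "")
    new_env["LD_PRELOAD"] = (
        f"{library_path}:{existing}" if existing else library_path
    )
    return new_env


def run_intercepted(command, library_path):
    """Run command (a list of arguments) with the library preloaded."""
    return subprocess.run(command, env=with_preload(library_path), check=True)


# Hypothetical usage; neither path is taken from the DAOS documentation:
# run_intercepted(["./my_app", "/mnt/dfuse/output.dat"], "/usr/lib64/libioil.so")
```

The application binary is untouched in this approach; the dynamic linker resolves its I/O calls to the preloaded library first, which is what lets the remapping happen in user space.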

<div>Cedric Milesi from HPE presented benchmark slides that showed that using the DFS (file-based) API over the native (array-based) API has no effect on performance:</div>

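<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Performance scaling of the native DAOS API (which encodes array objects) compared to the DAOS DFS API (which encodes file and directory objects). No discernible performance difference.</td></tr></tbody></table>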

<div>Thus, there is no performance difference whether you treat DAOS like an array store (its original design) or a file/directory store (through the libdfs API) as far as bandwidth is concerned. This is excellent news, as even though libdfs isn’t a drop-in replacement for POSIX I/O, it implements the POSIX data model (data is stored as streams of bytes), which is a more comfortable look and feel for a storage system than storing typed arrays. And since libioil is a shim atop libdfs, the above performance data suggests that POSIX applications won’t pay significant bandwidth overheads by preloading the POSIX intercept library to get DAOS compatibility out of the box.</div>

- It implicitly confirms that the verbs provider for libfabric not only works, but works well. Recall that the Intel testbed from which IO-500 was run used Intel OmniPath 100, whereas the Argonne testbed uses a competitor's fabric, InfiniBand.

- Single-stream performance of DAOS using the dfuse interface is 450 MB/sec which isn't terrible. For comparison, single-stream performance of Lustre on Cray Aries + FDR InfiniBand is about the same.

- Using the libioil POSIX interface dramatically increases the single-stream performance which shines a light on how costly using the Linux VFS kernel interface (with FUSE on top) really is. Not using FUSE, avoiding an expensive context switch into kernel mode, and avoiding a memcpy from a user buffer into a kernel buffer gives a 3x performance boost.

<h3>The burgeoning DAOS community</h3>

<h2>Everything else</h2>

<h3>Technical tidbits - the Cray Shasta cabinet</h3>

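<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">The front end of the new Cray Shasta compute cabinet</td></tr></tbody></table>

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">The back end of the new Cray Shasta compute cabinet</td></tr></tbody></table>

<table align="center" cellpadding="0" cellspacing="0" class="tr-caption-container" style="margin-left: auto; margin-right: auto; text-align: center;"><tbody><tr><td style="text-align: center;"> </td></tr><tr><td class="tr-caption" style="text-align: center;">Cray Slingshot switch blade and Cray chassis management module</td></tr></tbody></table>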

<h3>Less-technical tidbits</h3>

<h2>Staying organized</h2>

- For the Parallel I/O in Practice tutorial I co-presented, I brought my laptop so that all four presenters could project from it and I could use my iPad for keeping realtime notes.

- For PDSW, I brought my laptop just in case, knowing that I would be in the same room all day. I wound up presenting from it simply because it provided a better viewing angle from the podium; the room arrangements in Denver were such that it was impossible for a speaker at the podium to see the slides being projected, so he or she would have to rely on the device driving the projector to tell what content was actually being projected.