VAST Data's storage system architecture

VAST Data, Inc, an interesting new storage company, unveiled their new all-flash storage system today amidst a good amount of hype and fanfare. There’s no shortage of marketing material and trade press coverage out there about their company and the juiciest features of their storage architecture, so to catch up on what all the talk has been about, I recommend taking a look at

<div><ul><li>The VAST “Universal Storage” datasheet</li><li>The Next Platform’s article, “VAST Data Clustered Flash Storage Bans The Disk From The Datacenter”</li><li>Chris Mellor’s piece, “VAST Data: The first thing we do, let’s kill all the hard drives”</li></ul></div>

The reviews so far are quite sensational in the literal sense since VAST is one of very few storage systems being brought to market that have been designed from top to bottom to use modern storage technologies (containers, NVMe over Fabrics, and byte-addressable non-volatile memory) and tackle the harder challenge of file-based (not block-based) access.

In the interests of grounding the hype in reality, I thought I would share various notes I've jotted down based on my understanding of the VAST architecture. That said, I have to make a few disclaimers up front:

- I have no financial interests in VAST, I am not a VAST customer, I have never tested VAST, and everything I know about VAST has come from just a few conversations with a limited number of people in the company. This essentially means I have no idea what I'm talking about.

- I do not have any NDAs with VAST and none of this material is confidential. Much of it is from public sources now. I am happy to provide references where possible. If you are one of my sources and want to be cited or credited, please let me know.

- These views represent my own personal opinions and not those of my employer, sponsors, or anyone else.

With that in mind, what follows is a semi-coherent overview of the VAST storage system as I understand it. If you read anything that is wrong or misguided, rest assured that it is not intentional. Just let me know and I will be more than happy to issue corrections (and provide attribution if you so desire).

(Update on May 12, 2020: There is now an authoritative whitepaper on how VAST works under the hood on the VAST website. Read that, especially "How It Works," for a better informed description than this post.)

Relevant Technologies

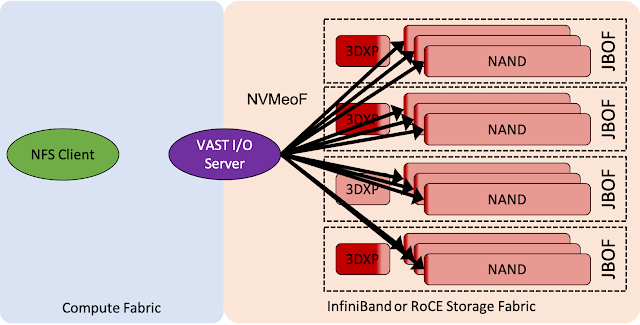

A VAST storage system is comprised of two flavors of building blocks:

- JBOFs (VAST calls them "d boxes" or "HA enclosures"). These things are what contain the storage media itself.

- I/O servers (VAST calls them "cnodes," "servers," "gateways" or, confusingly, "compute nodes"). These things are what HPC cluster compute nodes talk to to perform I/O via NFS or S3.

Tying these two building blocks together is an RDMA fabric of some sort--either InfiniBand or RoCE. Conceptually, it would look something like this:

For the sake of clarity, we'll refer to the HPC compute nodes that run applications and perform I/O through an NFS client as "clients" hereafter. We'll also assume that all I/O to and from VAST occurs using NFS, but remember that VAST also supports S3.

JBOFs

JBOFs are dead simple and their only job is to expose each NVMe device attached to them as an NVMe over Fabrics (NVMeoF) target. They are not truly JBOFs because they do have (from the VAST spec sheet):

- 2x embedded active/active servers, each with two Intel CPUs and the necessary hardware to support failover

- 4x 100 gigabit NICs, either operating using RoCE or InfiniBand

- 38x 15.36 TB U.2 SSD carriers. These are actually carriers that take multiple M.2 SSDs.

- 18x 960 GB U.2 Intel Optane SSDs

However, they are not intelligent. They are not RAID controllers, nor do they do any data motion between the SSDs they host. They literally serve each device out to the network and that's it.

I/O Servers

I/O servers are where the magic happens, and they are physically discrete servers that

- share the same SAN fabric as the JBOFs and speak NVMeoF on one side, and

- share a network with client nodes and talk NFS on the other side

These I/O servers are completely stateless; all the data stored by VAST is stored in the JBOFs. The I/O servers have no caches; their job is to turn NFS requests from compute nodes into NVMeoF transfers to JBOFs. Specifically, they perform the following functions:

- Determine which NVMeoF device(s) to talk to to serve an incoming I/O request from an NFS client. This is done using a hashing function.

- Enforce file permissions, ACLs, and everything else that an NFS client would expect.

- Transfer data to/from SSDs, and transfer data to/from 3D XPoint drives.

- Transfer data between SSDs and 3D XPoint drives. This happens as part of the regular write path, to be discussed later.

- Perform "global compression" (discussed later), rebuilds from parity, and other maintenance tasks.

It is also notable that I/O servers do not have an affinity to specific JBOFs as a result of the hash-based placement of data across NVMeoF targets. They are all simply stateless worker bees that process I/O requests from clients and pass them along to the JBOFs. As such, they do not need to communicate with each other or synchronize in any way.
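To make the idea of hash-based placement concrete, here is a minimal sketch. It is not VAST's actual algorithm, which I do not know; the `place_extent` function, the choice of SHA-256, and the target names are all assumptions made up for illustration. The only point is that every I/O server can compute the same answer on its own, with no shared state.

```python
import hashlib

def place_extent(file_id: int, extent_index: int, targets: list[str]) -> str:
    """Map a (file, extent) pair to one NVMeoF target using only a hash.

    Hypothetical: VAST's real hashing function is not public.
    """
    key = f"{file_id}:{extent_index}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer and wrap it
    # around the number of available targets.
    return targets[int.from_bytes(digest[:8], "big") % len(targets)]

# Two JBOFs with 56 devices each (the names are invented for this example).
targets = [f"jbof{j}-dev{d}" for j in range(2) for d in range(56)]

# Two independent "I/O servers" reach the same conclusion without talking.
server_a = place_extent(file_id=42, extent_index=7, targets=targets)
server_b = place_extent(file_id=42, extent_index=7, targets=targets)
```

A real system would presumably use something more stable than a plain modulo so that adding JBOFs does not remap most of the data, but I have no insight into what VAST does here.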

System Composition

Because I/O servers are stateless and operate independently, they can be dynamically added (and removed) from the system at any time to increase or decrease the I/O processing power available to clients. VAST's position is that the peak I/O performance to the JBOFs is virtually always CPU limited since the data path between CPUs (in the I/O servers) and the storage devices (in JBOFs) uses NVMeoF. This is a reasonable assertion since NVMeoF is extremely efficient at moving data as a result of its use of RDMA and simple block-level access semantics.

At the same time, this design requires that every I/O server be able to communicate with every SSD in the entire VAST system via NVMeoF. This means that each I/O server mounts every SSD at the same time; in a relatively small two-JBOF system, this results in 112x NVMe targets on every I/O server. This poses two distinct challenges:

- From an implementation standpoint, this is pushing the limits of how many NVMeoF targets a single Linux host can effectively manage in practice. For example, a 10 PB VAST system will have over 900 NVMeoF targets mounted on every single I/O server. There is no fundamental limitation here, but this scale will exercise pieces of the Linux kernel in ways it was never designed to be used.

- From a fundamental standpoint, this puts tremendous pressure on the storage network. Every I/O server has to talk to every JBOF as a matter of course, resulting in a network dominated by all-to-all communication patterns. This will make performance extremely sensitive to topology, and while I wouldn't expect any issues at smaller scales, high-diameter fat trees will likely see these sensitivities manifest. The Lustre community turned to fine-grained routing to counter this exact issue on fat trees. Fortunately, InfiniBand now has adaptive routing that I expect will bring much more forgiveness to this design.
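The target counts quoted above follow directly from the JBOF spec sheet. The sketch below reproduces them; the one assumption of mine is that "10 PB" refers to raw NAND capacity in decimal units with no data reduction applied.

```python
import math

NAND_PER_JBOF = 38     # 15.36 TB U.2 carriers per JBOF (from the spec sheet)
XPOINT_PER_JBOF = 18   # 960 GB Optane SSDs per JBOF (from the spec sheet)
NAND_TB = 15.36

def targets_per_server(num_jbofs: int) -> int:
    """Every I/O server mounts every device in every JBOF."""
    return num_jbofs * (NAND_PER_JBOF + XPOINT_PER_JBOF)

def jbofs_for_capacity(raw_pb: float) -> int:
    """JBOFs needed for a given raw NAND capacity (assumes 1 PB = 1000 TB)."""
    return math.ceil(raw_pb * 1000 / (NAND_PER_JBOF * NAND_TB))

two_jbof_targets = targets_per_server(2)  # 112
ten_pb_targets = targets_per_server(jbofs_for_capacity(10))
```

Under these assumptions a 10 PB system needs 18 JBOFs, which puts the per-server target count comfortably over 900.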

This said, VAST has tested their architecture at impressively large scale and has an aggressive scale-out validation strategy.

Shared-everything consistency

Mounting every block device on every server may also sound like anathema to anyone familiar with block-based SANs, and generally speaking, it is. NVMeoF (and every other block-level protocol) does not really have locking, so if a single device is mounted by two servers, it is up to those servers to communicate with each other to ensure they aren't attempting to modify the same blocks at the same time. Typical shared-block configurations manage this by simply assigning exclusive ownership of each drive to a single server and relying on heartbeating or quorum (e.g., in HA enclosures or GPFS) to decide when to change a drive's owner. StorNext (formerly CVFS) allows all clients to access all devices, but it uses a central metadata server to manage locks.

VAST can avoid a lot of these problems by simply not caching any I/Os on the I/O servers and instead passing NFS requests through as NVMeoF requests. This is not unlike how parallel file systems like PVFS (now OrangeFS) avoided the lock contention problem; not using caches dramatically reduces the window of time during which two conflicting I/Os can collide. VAST also claws back some of the latency penalties of doing this sort of direct I/O by issuing all writes to nonvolatile memory instead of flash; this will be discussed later.

For the rare cases where two I/O servers are asked to change the same piece of data at the same time though, there is a mechanism by which an extent of a file (which is on the order of 4 KiB) can be locked. I/O servers will flip a lock bit for that extent in the JBOF's memory using an atomic RDMA operation before issuing an update to serialize overlapping I/Os to the same byte range.
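A minimal sketch of what flipping a lock bit with an atomic compare-and-swap might look like is below. Everything here is hypothetical: `JbofMemory` is a stand-in for lock words held in a JBOF's RAM, and its internal mutex only emulates the atomicity that an RDMA compare-and-swap would provide in hardware.

```python
import threading

UNLOCKED, LOCKED = 0, 1

class JbofMemory:
    """Toy model of lock words living in a JBOF's memory (hypothetical)."""

    def __init__(self) -> None:
        self._words: dict[int, int] = {}
        self._atomic = threading.Lock()  # emulates hardware atomicity only

    def compare_and_swap(self, addr: int, expected: int, new: int) -> int:
        """Set addr to new if it currently holds expected; return the old value."""
        with self._atomic:
            old = self._words.get(addr, UNLOCKED)
            if old == expected:
                self._words[addr] = new
            return old

def try_lock_extent(mem: JbofMemory, extent_id: int) -> bool:
    """An I/O server wins the lock only if the word was previously unlocked."""
    return mem.compare_and_swap(extent_id, UNLOCKED, LOCKED) == UNLOCKED

def unlock_extent(mem: JbofMemory, extent_id: int) -> None:
    mem.compare_and_swap(extent_id, LOCKED, UNLOCKED)

mem = JbofMemory()
first = try_lock_extent(mem, extent_id=1234)   # first server takes the lock
second = try_lock_extent(mem, extent_id=1234)  # second server is refused
unlock_extent(mem, extent_id=1234)
third = try_lock_extent(mem, extent_id=1234)   # lock is available again
```

Note that nothing in this sketch ever clears a lock whose holder has gone away; the lock word stays set until someone explicitly resets it.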

VAST also uses redirect-on-write to ensure that writes are always consistent. If a JBOF fails before an I/O is complete, presumably any outstanding locks evaporate since they are resident only in RAM. Any changes that were in flight simply get lost because the metadata structure that describes the affected file's layout only points to updated extents after they have been successfully written. Again, this redirect-on-complete is achieved using an atomic RDMA operation, so data is always consistent. VAST does not need to maintain a write journal as a result.
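The redirect-on-write behavior can be illustrated with a toy extent store. This is only a sketch of the general technique, not VAST's implementation; the `ExtentStore` class and its `crash_before_commit` flag are inventions for this example, and the single dictionary assignment stands in for the atomic RDMA operation that commits the new pointer.

```python
class ExtentStore:
    """Toy redirect-on-write store: data blocks are never overwritten."""

    def __init__(self) -> None:
        self.blocks: list[bytes] = []         # append-only data locations
        self.extent_map: dict[int, int] = {}  # extent index -> block location

    def write(self, extent: int, data: bytes, crash_before_commit: bool = False) -> None:
        # Step 1: write the new data somewhere new; the old block is untouched.
        self.blocks.append(data)
        new_location = len(self.blocks) - 1
        if crash_before_commit:
            return  # the in-flight change is lost; metadata still points at old data
        # Step 2: commit by swinging a single pointer.
        self.extent_map[extent] = new_location

    def read(self, extent: int) -> bytes:
        return self.blocks[self.extent_map[extent]]

store = ExtentStore()
store.write(0, b"version 1")
store.write(0, b"version 2", crash_before_commit=True)  # simulated failure
after_crash = store.read(0)
store.write(0, b"version 3")
after_commit = store.read(0)
```

Because a reader only ever follows the committed pointer, it sees either the old version or the new one and never a partially written mix, which is why no write journal is needed in this model.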

It is not clear to me what happens to locks in the event that an I/O server fails while it has outstanding I/Os. Since I/O servers do not talk to each other, there is no means by which they can revoke locks or probe each other for timeouts. Similarly, JBOFs are dumb, so they cannot expire locks.

The VAST write path

I think the most meaningful way to demonstrate how VAST employs parity and compression while maintaining low latency is to walk through each step of the write path and show what happens between the time an application issues a write(2) call and the time that write call returns.

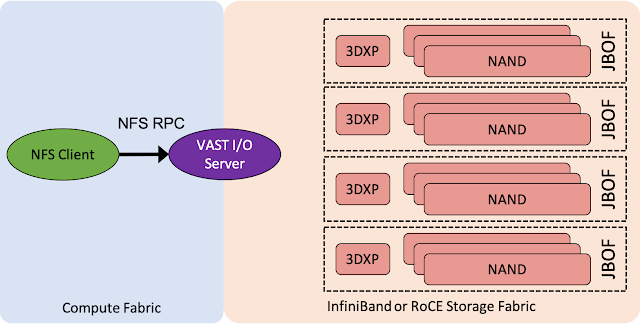

First, an application on a compute node issues a write(2) call on an open file that happens to reside on an NFS mount that points to a VAST server. That write flows through the standard Linux NFS client stack and eventually results in an NFS RPC being sent over the wire to a VAST server. Because VAST clients use the standard Linux NFS client, they inherit a few standard limitations. For example,

- There is no parallel I/O from the client. A single client cannot explicitly issue writes to multiple I/O servers. Instead, some sort of load balancing technique must be inserted between the client and servers.

- VAST violates POSIX because it only ensures NFS close-to-open consistency. If two compute nodes try to modify the same 4 KiB range of the same file at the same time, the result will be corrupt data. VAST's server-side locking cannot prevent this because it happens at the client side. The best way around this is to force all I/O destined to a VAST file system to use direct I/O (e.g., open with O_DIRECT).

Pictorially, it might look something like this:

Step 1 of VAST write path: client issues a standard NFS RPC to a VAST I/O server

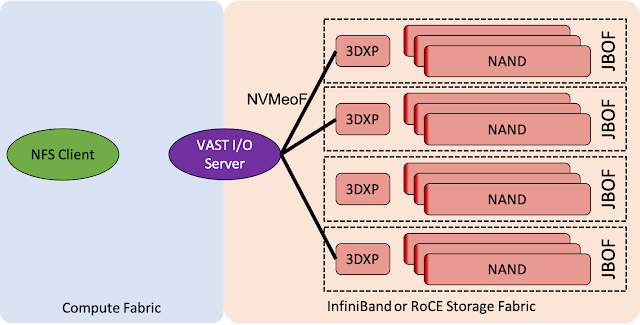

Then the VAST I/O server receives the write RPC and has to figure out to which NVMeoF device(s) the data should be written. This is done by first determining on which NVMe device the appropriate file's metadata is located. This metadata is stored in B-tree-like data structures that have a very wide fan-out ratio and whose roots are mapped to physical devices algorithmically. Once an I/O server has algorithmically determined which B-tree holds a specific file's metadata, it traverses that tree to find the file and then the location of that file's extents. The majority of these metadata trees live in 3D XPoint, but very large file systems may have their outermost levels stored in NAND.

A key aspect of VAST's architecture is that writes always land on 3D XPoint first; this narrows down the possible NVMeoF targets to those which are storage-class memory devices.

Pictorially, this second step may look something like this:

Step 2 of VAST write path: I/O server forwards write to 3D XPoint devices. Data is actually triplicated at this point for reasons that will be explained later.

VAST uses 3D XPoint for two distinct roles:

- Temporarily store all incoming writes

- Store the metadata structures used to describe files and where the data for files reside across all of the NVMe devices

VAST divides the 3D XPoint used for the first role (temporarily storing incoming writes) into buckets. Buckets are used to group data based on how long that data is expected to persist before being erased; incoming writes that will be written once and never erased go into one bucket, while incoming writes that may be overwritten (erased) in a very short time will go into another. VAST is able to make educated guesses about this because it knows many user-facing features of the file (its parent directory, extension, owner, group, etc.) to which incoming writes are being written, and it tracks file volatility over time.
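I do not know what heuristics VAST actually uses, but the general idea of volatility-based bucketing can be sketched as follows. The bucket boundaries, the `VolatilityTracker` class, and the choice to key on parent directory and extension alone are all assumptions for the sake of illustration.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Hypothetical bucket boundaries, in seconds of expected data lifetime.
BUCKETS = [(60.0, "short-lived"), (86400.0, "medium-lived"), (float("inf"), "long-lived")]

class VolatilityTracker:
    """Guess how long new data will live from how similar files behaved before."""

    def __init__(self) -> None:
        # (parent directory, extension) -> observed lifetimes in seconds
        self._history: dict[tuple[str, str], list[float]] = defaultdict(list)

    @staticmethod
    def _key(path: str) -> tuple[str, str]:
        p = PurePosixPath(path)
        return (str(p.parent), p.suffix)

    def record_overwrite(self, path: str, lifetime_s: float) -> None:
        """Remember how long a piece of data survived before being overwritten."""
        self._history[self._key(path)].append(lifetime_s)

    def choose_bucket(self, path: str) -> str:
        seen = self._history.get(self._key(path))
        if not seen:
            return "long-lived"  # arbitrary default when there is no evidence of churn
        mean = sum(seen) / len(seen)
        return next(name for limit, name in BUCKETS if mean < limit)

tracker = VolatilityTracker()
tracker.record_overwrite("/scratch/run1/ckpt.tmp", 30.0)
tracker.record_overwrite("/scratch/run1/other.tmp", 20.0)
scratch_bucket = tracker.choose_bucket("/scratch/run1/new.tmp")
archive_bucket = tracker.choose_bucket("/archive/2019/results.h5")
```

Here temporary files in a scratch directory land in the short-lived bucket because similar files were overwritten within seconds, while a file with no history of churn is treated as long-lived.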

Data remains in a 3D XPoint bucket until that bucket is full. The bucket is full when its size can be written to the NAND SSDs such that entire SSD erase blocks (which VAST claims can be on the order of a gigabyte in size) can be written down to NAND at once. Since JBOFs are dumb, this actually results in I/O servers reading back a full bucket out of 3D XPoint and then writing it out to the NAND devices themselves.

Data that shares an erase block should also tend to be invalidated together, which follows from

- the combined volatility-based bucketing of data (similarly volatile data tends to reside in the same erase block), and

- VAST's redirect-on-write nature (data is never overwritten; updated data is simply written elsewhere and the file's metadata is updated to point to the new data).

Because VAST relies on cheap consumer NAND SSDs, the data is not safe in the event of a power loss even after the NAND SSD claims the data is persisted. As a result, VAST then forces each NAND SSD to flush its internal caches to physical NAND. Once this flush command returns, the SSDs have guaranteed that the data is power fail-safe. VAST then deletes the bucket contents from 3D XPoint:

Step 5 of the VAST write path: Once data is truly persisted and safe in the event of power loss, VAST purges the original copy of that bucket that resides on the 3D XPoint.

The metadata structures for all affected files are updated to point at the version of the data that now resides on NAND SSDs, and the bucket is free to be filled by the next generation of incoming writes.

Data Protection

These large buckets also allow VAST to use extremely wide striping for data protection. As writes come in and fill buckets, large stripes are also being built with a minimum of 40+4 parity protection. Unlike in a traditional RAID system where stripes are built in memory, VAST's use of nonvolatile memory (3D XPoint) to store partially full buckets allows very wide stripes to be built over larger windows of time without exposing the data to loss in the event of a power failure. Partial stripe writes never happen because, by definition, a stripe is only written down to flash once it is full.

Bucket sizes (and by extension, stripe sizes) are variable and dynamic. VAST will opportunistically write down a stripe as erase blocks become available. As the number of NVMe devices in the VAST system increases (e.g., more JBOFs are installed), stripes can become wider. This is advantageous when one considers the erasure coding scheme that VAST employs; rather than use a Reed-Solomon code, they have developed their own parity algorithm that allows blocks to be rebuilt from only a subset of the stripe. An example stated by VAST is that a 150+4 stripe only requires 25% of the remaining data to be read to rebuild. As pointed out by Shuki Bruck though, this is likely a derivative of the Zigzag coding scheme introduced by Tamo, Wang, and Bruck in 2013, where data coded using N+M parity only requires (N+M)/M reads to rebuild.
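The 25% figure is consistent with the (N+M)/M expression, as the short calculation below shows. It compares that expression against a conventional Reed-Solomon rebuild, which must read N surviving blocks to reconstruct one lost block. The function names are mine, and expressing reads as a fraction of the N+M-1 surviving blocks is my own interpretation of "remaining data."

```python
def reed_solomon_rebuild_reads(n: int, m: int) -> int:
    """A conventional N+M Reed-Solomon rebuild of one lost block reads N blocks."""
    return n

def claimed_rebuild_reads(n: int, m: int) -> float:
    """Block-equivalents read under the (N+M)/M figure quoted above."""
    return (n + m) / m

def fraction_of_survivors(reads: float, n: int, m: int) -> float:
    """Reads expressed as a fraction of the N+M-1 surviving blocks."""
    return reads / (n + m - 1)

n, m = 150, 4
rs_fraction = fraction_of_survivors(reed_solomon_rebuild_reads(n, m), n, m)
vast_fraction = fraction_of_survivors(claimed_rebuild_reads(n, m), n, m)

# Capacity given up to parity for the two stripe widths mentioned above.
parity_overhead_40_4 = 4 / (40 + 4)
parity_overhead_150_4 = 4 / (150 + 4)
```

For a 150+4 stripe, the Reed-Solomon rebuild touches about 98% of the surviving blocks while the (N+M)/M figure works out to roughly 25%. Widening the stripe from 40+4 to 150+4 also drops the parity overhead from about 9% to under 3%.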

To summarize, parity-protected stripes are slowly built in storage-class memory over time from bits of data that are expected to be erased at roughly the same time. Once a stripe is fully built in 3D XPoint, it is written down to the NAND devices. As a reminder, I/O servers are responsible for moderating all of this data movement and parity generation; the JBOFs are dumb and simply offer up the 3D XPoint targets.

To protect data as stripes are being built, the contents of the 3D XPoint layer are simply triplicated. This is to say that every partially built stripe's contents appear on three different 3D XPoint devices.

Performance Expectations

This likely has a profound effect on the write performance of VAST; if a single 1 MB write is issued by an NFS client, the I/O server must write 3 MB of data to three different 3D XPoint devices. While this would not affect latency by virtue of the fact that the I/O server can issue NVMeoF writes to multiple JBOFs concurrently, this means that the NICs facing the backend InfiniBand fabric must be able to inject data three times as fast as data arriving from the front-end, client-facing network. Alternatively, VAST is likely to carry an intrinsic 3x performance penalty to writes versus reads.

There are several factors that will alter this in practice:

- Both 3D XPoint SSDs and NAND SSDs have higher read bandwidth than write bandwidth as a result of the power consumption associated with writes. This will further increase the 3:1 read:write performance penalty.

- VAST always writes to 3D XPoint but may often read from NAND. This closes the gap in theory, since 3D XPoint is significantly faster at both reads and writes than NAND is at reads in most cases. However the current 3D XPoint products on the market are PCIe-attached and limited to PCIe Gen3 speeds, so there is not a significant bandwidth advantage to 3D XPoint writes vs. NAND reads.
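The baseline 3x arithmetic is simple enough to write down. In this sketch the replica count comes from the triplication described above, but the 100 Gb/s back-end NIC speed for an I/O server is a hypothetical number I picked for illustration.

```python
REPLICAS = 3  # every incoming write lands on three 3D XPoint devices

def backend_bytes_for_write(client_bytes: int, replicas: int = REPLICAS) -> int:
    """Bytes an I/O server must push to the back-end fabric for one client write."""
    return client_bytes * replicas

def max_client_write_gbps(backend_nic_gbps: float, replicas: int = REPLICAS) -> float:
    """Client-facing write rate sustainable if the back-end NIC is the bottleneck."""
    return backend_nic_gbps / replicas

one_mb_write = backend_bytes_for_write(1_000_000)  # bytes sent to the back end
ceiling = max_client_write_gbps(100.0)             # hypothetical 100 Gb/s NIC
```

With these inputs, a single I/O server could accept only about a third of its back-end NIC bandwidth in client writes, before either of the factors above is taken into account.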

It is also important to point out that VAST has yet to publicly disclose any performance numbers. However, using replication to protect writes is perhaps the only viable strategy to deliver extremely high IOPS without sacrificing data protection. WekaIO, which also aims to deliver extremely high IOPS, showed a similar 3:1 read:write performance skew in their IO-500 submission in November. While WekaIO uses a very different approach to achieving low latency at scale, their benchmark numbers indicate that scalable file systems that optimize for IOPS are likely to sacrifice write throughput to achieve this. VAST's architecture and choice to replicate writes is in line with this expectation, but until VAST publishes performance numbers, this is purely speculative. I would like to be proven wrong.

Other Bells and Whistles

The notes presented above are only a small part of the full VAST architecture, and since I am no expert on VAST, I'm sure there's even more that I don't realize I don't know or fully understand. That said, I'll highlight a few examples of which I am tenuously aware:

Because every I/O server sees every NVMe device, it can perform global compression. Typical compression algorithms are designed only to compress adjacent data within a fixed block size, which means similar but physically disparate blocks cannot be reduced. VAST tracks a similarity value for extents in its internal metadata and will group these similar extents before compressing them. I envision this to work something like a Burrows-Wheeler transformation (it is definitely not one though) and conceptually combines the best features of compression and deduplication. I have to assume this compression happens somewhere in the write path (perhaps as stripes are written to NAND), but I don't understand this in any detail.

The exact compression algorithm is one of VAST's own design, and it is not block-based as a result of VAST not having a fixed block size. This means that decompression is also quite different from block-based compression; according to VAST, their algorithm can decompress only a local subset of data such that reads do not require similar global decompression. The net result is that read performance of compressed data is not significantly compromised. VAST has a very compelling example where they compressed data that was already compressed and saw a significant additional capacity savings as a result of the global nature of their algorithm. While I normally discount claims of high compression ratios since they never hold up for scientific data, the conceptual underpinnings of VAST's approach to compression sound promising.

VAST is also very closely tied to byte-addressable nonvolatile storage from top to bottom, and much of this is a result of their B-tree-based file system metadata structure. They refer to their underlying storage substrate as an "element store" (which I imagine to be similar to a key-value store), and it sounds like it is designed to store a substantial amount of metadata per file. In addition to standard POSIX metadata and the pointers to data extents on various NVMe devices, VAST also stores user metadata (in support of their S3 interface) and internal metadata (such as heuristics about file volatility, versioning for continuous snapshots, etc). This element store API is not exposed to customers, but it sounds like it is sufficiently extensible to support a variety of other access APIs beyond POSIX and S3.

Take-away Messages

VAST is an interesting new all-flash storage system that resulted from taking a green-field approach to storage architecture. It uses a number of new technologies (storage-class memory/3D XPoint, NAND, NVMe over fabrics) in intellectually satisfying ways, and builds on them using a host of byte-granular algorithms. It looks like it is optimized for both cost (in its intelligent optimization of flash endurance) and latency (landing I/Os on 3D XPoint and using triplication) which have been traditionally difficult to optimize together.

Its design does rely on an extremely robust backend RDMA fabric, and the way in which every I/O server must mount every storage device sounds like a path to scalability problems--both in terms of software support in the Linux NVMeoF stack and fundamental sensitivities to topology inherent in large, high-diameter RDMA fabrics. The global all-to-all communication patterns and choice to triplicate writes make the back-end network critically important to the overall performance of this architecture.

That said, the all-to-all ("shared everything") design of VAST brings a few distinct advantages as well. As the system is scaled to include more JBOFs, the global compression scales as well and can recover an increasing amount of capacity. Similarly, data durability increases as stripes can be made wider and be placed across different failure domains. In this sense, the efficiency of the system increases as it gets larger due to the global awareness of data. VAST's choice to make the I/O servers stateless and independent also adds the benefit of being able to scale the front-end capability of the system independently of the back-end capacity. Provided the practical and performance challenges of scaling out described in the previous paragraph do not manifest in reality, this bigger-is-better design is an interesting contrast to the mass storage systems of today which, at best, do not degrade as they scale out. Unfortunately, VAST has not disclosed any performance or scaling numbers, so the proof will be in the pudding.

However, VAST has hinted that the costs are "one fifth to one eighth" of enterprise flash today; by their own estimates of today's cost of enterprise flash, this translates to a cost of between $0.075 and $0.12 per gigabyte of flash when deployed in a VAST system. This remains 3x-5x more expensive than spinning disk today, but the cost of flash is dropping far faster than the cost of hard drives, so the near-term future may truly make VAST cost-comparable to disk. As flash prices continue to plummet though, the VAST cost advantage may become less dramatic over datacenter flash, but their performance architecture will remain compelling when compared to a traditional disk-oriented networked file system.
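As a quick consistency check on those numbers, both ends of the quoted range imply the same baseline price for enterprise flash. The baseline itself is not stated above; it is simply what falls out of the arithmetic.

```python
import math

VAST_LOW, VAST_HIGH = 0.075, 0.12  # $/GB range quoted above

# "One fifth to one eighth" of enterprise flash: back out the baseline price.
baseline_from_eighth = VAST_LOW * 8
baseline_from_fifth = VAST_HIGH * 5

# Both ends of the range should point at the same enterprise flash price.
consistent = math.isclose(baseline_from_eighth, baseline_from_fifth)
```

Both calculations land on roughly $0.60 per gigabyte, so the quoted range and the "one fifth to one eighth" claim agree with each other.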

As alluded to above, VAST is not the first company to develop a file-based storage system designed specifically for flash, and they share many similar architectural design patterns with their competition. This is creating gravity around a few key concepts:

- Both flash and RDMA fabrics handle kilobyte-sized transfers with grace, so the days of requiring megabyte-sized I/Os to achieve high bandwidth are nearing an end.

- The desire to deliver high IOPS makes replication an essential part of the data path which will skew I/O bandwidth towards reads. This maps well for read-intensive workloads such as those generated by AI, but this does not bode as well for write-intensive workloads of traditional modeling and simulation.

- Reserving CPU resources exclusively for driving I/O is emerging as a requirement to get low-latency and predictable I/O performance with kilobyte-sized transfers. Although not discussed above, VAST uses containerized I/O servers to isolate performance-critical logic from other noise on the physical host. This pattern maps well to the notion that in exascale, there will be an abundance of computing power relative to the memory bandwidth required to feed computations.

- File-based I/O is not entirely at odds with very low-latency access, but this file-based access is simply one of many interfaces exposed atop a more flexible key-value type of data structure. As such, as new I/O interfaces emerge to serve the needs of extremely latency-sensitive workloads, these flexible new all-flash storage systems can simply expose their underlying performance through other non-POSIX APIs.

Finally, if you've gotten this far, it is important to underscore that I am in no way speaking authoritatively about anything above. If you are really interested in VAST or related technologies, don't take it from me; talk to the people and companies developing them directly.