Reviewing the state of the art of burst buffers

Just over two years ago I attended my first DOE workshop as a guest representative of the NSF supercomputing centers, and I wrote a post that summarized my key observations of how the DOE was approaching the increase in data-intensive computing problems. At the time, the most significant thrusts seemed to be

<ol><li>understanding scientific workflows to keep pace with the need to process data in complex ways</li><li>deploying burst buffers to overcome the performance limitations of spinning disk relative to the increasing scale of simulation data</li><li>developing methods and processes to curate scientific data</li></ol>

Here we are two years later, and these issues still take center stage in the discussion surrounding the future of data-intensive computing. The DOE has made significant progress in defining its path forward in these areas, though; in particular, the roles of both burst buffers and scientific workflows now have a much clearer place on DOE’s HPC roadmap. Burst buffers are becoming a major area of interest now that they are commercially available, so in the interest of updating some of the incorrect or incomplete thoughts I wrote about two years ago, I thought I’d write about the current state of the art in burst buffers in HPC.

Two years ago I observed that there were two major camps in burst buffer implementations: one that is more tightly integrated with the compute side of the platform and relies on explicit allocation and use, and another that is more closely integrated with the storage subsystem and acts as a transparent I/O accelerator. Shortly after I made that observation though, Oak Ridge and Lawrence Livermore announced their GPU-based leadership systems, Summit and Sierra, which would introduce an altogether new type of burst buffer design built around on-node nonvolatile memory.

This CORAL announcement, combined with the deployment of production, large-scale burst buffers at NERSC, Los Alamos, and KAUST, has led me to re-think my taxonomy of burst buffers. Specifically, it really is important to divide burst buffers into their hardware architectures and software usage modes; different burst buffer architectures can provide the same usage modalities to users, and different modalities can be supported by the same architecture.


For the sake of laying it all out, let’s walk through the taxonomy of burst buffer hardware architectures and burst buffer software usage modalities.

<h2>Burst Buffer Hardware Architectures</h2>First, consider your typical medium- or large-scale HPC system architecture without a burst buffer:


</div>

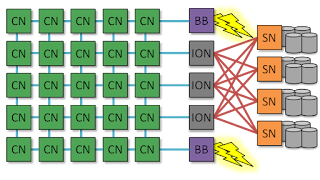

In this design, you have

<ul><li>Compute Nodes (CN), which might be commodity whitebox nodes like the Dell C6320 nodes in SDSC’s Comet system or Cray XC compute blades</li><li>I/O Nodes (ION), which might be commodity Lustre LNET routers (commodity clusters), Cray DVS nodes (Cray XC), or CIOD forwarders (Blue Gene)</li><li>Storage Nodes (SN), which might be Lustre Object Storage Servers (OSSes) or GPFS Network Shared Disk (NSD) servers</li><li>The compute fabric (blue lines), which is typically Mellanox InfiniBand, Intel Omni-Path, or Cray Aries</li><li>The storage fabric (red lines), which is typically Mellanox InfiniBand or Intel Omni-Path</li></ul>

Given all these parts, there are a bunch of different places you can stick flash devices to create a burst buffer. For example…

<h3>ION-attached Flash</h3>You can put SSDs inside IO nodes, resulting in an ION-attached flash architecture that looks like this:


</div>

Gordon, which was the first large-scale deployment of what one could call a burst buffer, had this architecture. The flash was presented to the compute nodes as block devices using iSCSI, and a compute node could have anywhere between zero and sixteen SSDs mounted to it entirely via software. More recently, the Tianhe-2 system at NUDT also deployed this architecture and exposes the flash to user applications via their H2FS middleware.

<h3>Fabric-attached Flash</h3>A very similar architecture is to add specific burst buffer nodes on the compute fabric that don’t route I/O, resulting in a fabric-attached flash architecture:


Like the ION-attached flash design of Gordon, the flash is still embedded within the compute fabric and is logically closer to the compute nodes than the storage nodes. Cray’s DataWarp solution uses this architecture.

</div>


Because the flash is still on the compute fabric, this design is very similar to ION-attached flash, and the decision to choose it over the ION-attached flash design is mostly non-technical. It can be more economical to embed flash directly in I/O nodes if those nodes have enough peripheral ports (or physical space!) to support the NICs for the compute fabric, the NICs for the storage fabric, and the flash devices. However, as flash technology moves away from being attached via SAS and towards being directly attached to PCIe, it becomes more difficult to stuff that many high-performance peripherals into a single box without unbalancing something. As such, it is likely that fabric-attached flash architectures will replace ION-attached flash going forward.

Fortunately, any burst buffer software designed for ION-attached flash designs will also probably work on fabric-attached flash designs just fine. The only difference is that the burst buffer software will no longer have to compete against the I/O routing software for on-node resources like memory or PCIe bandwidth.

<h3>CN-attached Flash</h3>A very different approach to building burst buffers is to attach a flash device to every single compute node in the system, resulting in a CN-attached flash architecture:


This design is neither superior nor inferior to the ION/fabric-attached flash design. The advantages it has over ION/fabric-attached flash include

<ul><li>Extremely high peak I/O performance - The peak performance scales linearly with the number of compute nodes, so the larger your job, the more performance your job can have.</li><li>Very low variation in I/O performance - Because each compute node has direct access to its locally attached SSD, contention on the compute fabric doesn’t affect I/O performance.</li></ul>

<div>However, these advantages come at a cost:</div>

- Limited support for shared-file I/O - Because each compute node doesn't share its SSD with other compute nodes, having many compute nodes write to a single shared file is not a straightforward process. Solutions to this issue range from such N-1 style I/O being simply impossible (the default case), to relying on I/O middleware like the SCR library to manage data distribution, to relying on sophisticated I/O services like Intel CPPR to essentially journal all I/O to the node-local flash and flush it to the parallel file system asynchronously.

- Data movement outside of jobs becomes difficult - Burst buffers allow users to stage data into the flash before their job starts and stage data back to the parallel file system after their job ends. However, with CN-attached flash, this staging occurs while someone else's job might be using the node. This can cause interference, capacity contention, or bandwidth contention. Furthermore, it becomes very difficult to persist data on a burst buffer allocation across multiple jobs without flushing and re-staging it.

- Node failures become more problematic - The point of writing out a checkpoint file is to allow you to restart a job in case one of its nodes fails. If your checkpoint file is actually stored on one of the nodes that failed, though, the whole checkpoint is lost along with that node. Thus, it becomes critical to flush checkpoint files to the parallel file system as quickly as possible so that your checkpoint is safe if a node fails. Realistically though, most application failures are not caused by node failures; a study by LLNL found that 85% of job interrupts do not take out the whole node.

- Performance cannot be decoupled from job size - Since you get more SSDs by requesting more compute nodes, there is no way to request only a few nodes and a lot of SSDs. While this is less an issue for extremely large HPC jobs whose I/O volumes typically scale linearly with the number of compute nodes, data-intensive applications often have to read and write large volumes of data but cannot effectively use a huge number of compute nodes.

Taking a step back, these strengths and weaknesses suggest the sort of system for which CN-attached flash is best suited. Such a system would have:

- Relatively low node count, so that you aren't buying way more SSD capacity or performance than you can realistically use given the bandwidth of the parallel file system to which the SSDs must eventually flush

- Relatively beefy compute nodes, so that the low node count doesn't hurt you and so that you can tolerate running I/O services to facilitate the asynchronous staging of data and middleware to support shared-file I/O

- Relatively beefy network injection bandwidth, so that asynchronous stage in/out doesn't severely impact the MPI performance of the jobs that run before/after yours

- Relatively large job sizes on average, so that applications routinely use enough compute nodes to get enough I/O bandwidth. Small jobs may be better off using the parallel file system directly, since parallel file systems can usually deliver more I/O bandwidth to smaller compute node counts.

- Relatively low diversity of applications, so that any applications that rely on shared-file I/O (which is not well supported by CN-attached flash, as we'll discuss later) can either be converted into using the necessary I/O middleware like SCR, or can be restructured to use only file-per-process or not rely on any strong consistency semantics.
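
To put rough numbers on the trade-off between node count and the bandwidth of the parallel file system, here is a back-of-the-envelope sketch in Python. All of the figures (per-SSD bandwidth, file system bandwidth, checkpoint size per node) and both function names are hypothetical and chosen only for illustration; they do not describe any real system.

```python
def aggregate_bandwidth_gbs(nodes: int, per_ssd_gbs: float) -> float:
    """Peak flash bandwidth when every compute node has one local SSD."""
    return nodes * per_ssd_gbs


def drain_time_s(nodes: int, gb_per_node: float, pfs_gbs: float) -> float:
    """Time to flush every node's data to the shared parallel file system."""
    return nodes * gb_per_node / pfs_gbs


# Hypothetical numbers, for illustration only.
PER_SSD_GBS = 2.0     # write bandwidth of one node-local SSD (GB/s)
PFS_GBS = 1000.0      # aggregate parallel file system bandwidth (GB/s)
GB_PER_NODE = 100.0   # checkpoint data written by each node (GB)

for nodes in (64, 512, 4096):
    flash_gbs = aggregate_bandwidth_gbs(nodes, PER_SSD_GBS)
    write_s = GB_PER_NODE / PER_SSD_GBS  # independent of job size
    drain_s = drain_time_s(nodes, GB_PER_NODE, PFS_GBS)
    print(f"{nodes:5d} nodes: {flash_gbs:7.1f} GB/s to flash, "
          f"write {write_s:.0f} s, drain {drain_s:.1f} s")
```

Under these assumptions the time to write a checkpoint to flash stays constant as the job grows, while the time to drain it to the parallel file system grows linearly with node count. That is why a very high node count can leave you with more flash performance than the file system behind it can absorb.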

<h3>Storage Fabric-attached Flash</h3>

- it moves the flash far away from the compute node, which is counterproductive to low latency

- it requires that the I/O forwarding layer (the IONs) support enough bandwidth to saturate the burst buffer, which can get expensive

<h2>Burst Buffer Software</h2>

<h3>Common Software Features</h3>

- Stage-in and stage-out - Burst buffers are designed to make a job's input data available on the burst buffer as soon as the job starts, and to allow output data to be flushed to the parallel file system after the job ends. To make this happen, the burst buffer service must give users a way to indicate which files they want available on the burst buffer when they submit their job, and a way to indicate which files they want flushed back to the file system after the job ends.

- Background data movement - Burst buffers are also not designed to be long-term storage, so their reliability can be lower than the underlying parallel file system. As such, users must also have a way to tell the burst buffer to flush intermediate data back to the parallel file system while the job is still running. This should happen using server-to-server copying that doesn't involve the compute node at all.

- POSIX I/O API compatibility - The vast majority of HPC applications rely on the POSIX I/O API (open/close/read/write) to perform I/O, and most job scripts rely on tools developed for the POSIX I/O API (cd, ls, cp, mkdir). As such, all burst buffers provide the ability to interact with data through the POSIX I/O API so that they look like regular old file systems to user applications. That said, the POSIX I/O semantics might not be fully supported; as will be described below, you may get an I/O error if you try to perform I/O in a fashion that is not supported by the burst buffer.
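
The stage-in and stage-out pattern described above can be modeled in a few lines of Python. This is only a toy sketch: two temporary directories stand in for the parallel file system and the burst buffer, and `stage`, `run_job`, and `demo_job` are hypothetical names rather than any vendor's API. On a real system the burst buffer service performs these copies on the user's behalf, ideally server-to-server, instead of the job doing them itself.

```python
import shutil
import tempfile
from pathlib import Path


def stage(files, src_root: Path, dst_root: Path) -> None:
    """Copy each relative path from src_root to dst_root."""
    for rel in files:
        dst = dst_root / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_root / rel, dst)


def run_job(pfs: Path, bb: Path, stage_in, stage_out, job) -> None:
    stage(stage_in, pfs, bb)    # stage-in: happens before the job starts
    job(bb)                     # the job itself only touches the burst buffer
    stage(stage_out, bb, pfs)   # stage-out: happens after the job ends


def demo_job(bb: Path) -> None:
    """Stand-in for an application: read input, write output, all on flash."""
    text = (bb / "input/params.txt").read_text()
    out = bb / "output/result.txt"
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(text.upper())


with tempfile.TemporaryDirectory() as tmp:
    pfs, bb = Path(tmp, "pfs"), Path(tmp, "bb")
    (pfs / "input").mkdir(parents=True)
    bb.mkdir()
    (pfs / "input/params.txt").write_text("steps=10\n")

    run_job(pfs, bb, ["input/params.txt"], ["output/result.txt"], demo_job)
    staged_result = (pfs / "output/result.txt").read_text()
    print(staged_result, end="")
```

The point of the sketch is that the user declares the two file lists up front, and the application code never needs to know that the parallel file system exists.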

<h3>Transparent Caching Mode</h3>

<pre>
$ ls /mnt/lustre/glock
bin project1 project2 public_html src

### Burst buffer mount point contains the same stuff as Lustre
$ ls /mnt/burstbuffer/glock
bin project1 project2 public_html src

### Create a file on Lustre...
$ touch /mnt/lustre/glock/hello.txt

$ ls /mnt/lustre/glock
bin hello.txt project1 project2 public_html src

### ...and it automatically appears on the burst buffer.
$ ls /mnt/burstbuffer/glock
bin hello.txt project1 project2 public_html src

### However its contents are probably not on the burst buffer's flash
### yet since we haven't read its contents through the burst buffer
### mount point, which is what would cause it to be cached
</pre>

However, if I access a file through the burst buffer mount (/mnt/burstbuffer/glock) rather than the parallel file system mount (/mnt/lustre/glock),

- if hello.txt is already cached on the burst buffer's SSDs, it will be read directly from flash

- if hello.txt is not already cached on the SSDs, the burst buffer will read it from the parallel file system, cache its contents on the SSDs, and return its contents to me
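
The two cases above amount to a read-through cache. Here is a minimal sketch in Python, where plain dictionaries stand in for the parallel file system and the SSDs; the class name and the hit/miss counters are my own inventions for illustration and say nothing about how any real burst buffer is implemented.

```python
class ReadThroughCache:
    """Toy model of reads through a transparent caching burst buffer."""

    def __init__(self, pfs: dict):
        self.pfs = pfs     # stands in for the parallel file system
        self.flash = {}    # stands in for the burst buffer's SSDs
        self.hits = 0
        self.misses = 0

    def read(self, path: str) -> bytes:
        if path in self.flash:
            # Already cached: serve directly from flash.
            self.hits += 1
            return self.flash[path]
        # Not cached: fetch from the file system, cache it, return it.
        self.misses += 1
        data = self.pfs[path]
        self.flash[path] = data
        return data


pfs = {"/glock/hello.txt": b"hello world\n"}
bb = ReadThroughCache(pfs)

first = bb.read("/glock/hello.txt")    # miss: comes from the file system
second = bb.read("/glock/hello.txt")   # hit: comes from flash
print(first == second, bb.misses, bb.hits)
```

The first read pays the cost of going to the parallel file system, and every later read of the same file is served from flash.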

<h3>Private PFS Mode</h3>

Although the transparent caching mode is the easiest to use, it doesn't give users a lot of control over what data does or doesn't need to be staged into the burst buffer. Another access mode involves creating a private parallel file system on-demand for jobs, which I will call private PFS mode. It provides a new parallel file system that is only mounted on your job's compute nodes, and this mount point contains only the data you explicitly copy to it:

<pre>
### Burst buffer mount point is empty; we haven't put anything there,
### and this file system is private to my job
$ ls /mnt/burstbuffer

### Create a file on the burst buffer file system...
$ dd if=/dev/urandom of=/mnt/burstbuffer/mydata.bin bs=1M count=10
10+0 records in
10+0 records out
10485760 bytes (10 MB) copied, 0.776115 s, 13.5 MB/s

### ...it appears on the burst buffer file system...
$ ls -l /mnt/burstbuffer
-rw-r----- 1 glock glock 10485760 Jan 1 00:00 mydata.bin

### ...and Lustre remains entirely unaffected
$ ls /mnt/lustre/glock
bin project1 project2 public_html src
</pre>

In addition, the burst buffer private file system is strongly consistent; as soon as you write data out to it, you can read that data back from any other node in your compute job. While this is true of transparent caching mode if you always access your data through the burst buffer mount point, you can run into trouble if you accidentally try to read a file from the original parallel file system mount point after writing out to the burst buffer mount. Since private PFS mode provides a completely different file system and namespace, it's a bit harder to make this mistake.
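
To illustrate the kind of trouble described above, here is a toy Python model of a caching burst buffer. It assumes the cache is write-back, meaning that writes land on flash first and only reach the parallel file system on a flush; that is my assumption for the sake of the example, and the class and its methods are hypothetical.

```python
class WriteBackBurstBuffer:
    """Toy model: writes land on flash and reach the PFS only on flush."""

    def __init__(self, pfs: dict):
        self.pfs = pfs      # stands in for the parallel file system
        self.dirty = {}     # data written to flash but not yet flushed

    def write(self, path: str, data: bytes) -> None:
        self.dirty[path] = data

    def read(self, path: str) -> bytes:
        # Reads through the burst buffer mount always see the newest data.
        return self.dirty.get(path, self.pfs.get(path))

    def flush(self) -> None:
        self.pfs.update(self.dirty)
        self.dirty.clear()


pfs = {"/glock/out.dat": b"old"}
bb = WriteBackBurstBuffer(pfs)

bb.write("/glock/out.dat", b"new")
via_bb = bb.read("/glock/out.dat")        # through the burst buffer mount
via_pfs_before = pfs["/glock/out.dat"]    # through the original mount: stale
bb.flush()
via_pfs_after = pfs["/glock/out.dat"]     # consistent again after the flush
print(via_bb, via_pfs_before, via_pfs_after)
```

Between the write and the flush, the two mount points disagree about the file's contents. A private PFS avoids this class of mistake simply because there is no second path to the same file.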

Cray's DataWarp implements private PFS mode, and the Tsubame 3.0 burst buffer will be implementing private PFS mode using on-demand BeeGFS. This mode is most easily implemented on fabric/ION-attached flash architectures, but Tsubame 3.0 is demonstrating that it can also be done on CN-attached flash.

<h3>Log-structured/Journaling Mode</h3>

As probably the least user-friendly but highest-performing use mode, log-structured (or journaling) mode burst buffers present themselves to users like a file system, but they do not support the full extent of file system features. Under the hood, writes are saved to the flash not as files, but as records that contain a timestamp, the data to be written, and the location in the file to which the data should be written. These logs are continually appended as the application performs its writes, and when it comes time to flush the data to the parallel file system, the logs are replayed to effectively reconstruct the file that the application was trying to write.

This can perform extremely well since even random I/O winds up being restructured as sequentially appended I/O. Furthermore, there can be as many logs as there are writers; this allows writes to happen with zero lock contention, since contended writes are resolved when the data is replayed and flushed.
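
A minimal sketch of this idea in Python follows. Each writer appends (timestamp, offset, data) records to its own log, and a replay step merges the logs to reconstruct the file. The names are hypothetical, a shared counter stands in for real timestamps, and resolving overlapping writes by letting the latest timestamp win is my assumption rather than a documented behavior of any particular burst buffer.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Record:
    timestamp: int   # when the write happened
    offset: int      # where in the file the data belongs
    data: bytes      # the bytes to be written


_clock = count()     # logical clock standing in for real timestamps


class WriterLog:
    """Append-only log owned by a single writer, so no locks are needed."""

    def __init__(self):
        self.records = []

    def write(self, offset: int, data: bytes) -> None:
        self.records.append(Record(next(_clock), offset, data))


def replay(logs, size: int) -> bytes:
    """Reconstruct the file by applying every record in timestamp order."""
    out = bytearray(size)
    ordered = sorted((r for log in logs for r in log.records),
                     key=lambda r: r.timestamp)
    for r in ordered:
        out[r.offset:r.offset + len(r.data)] = r.data
    return bytes(out)


a, b = WriterLog(), WriterLog()
a.write(8, b"DDDD")   # random offsets become sequential appends
b.write(0, b"AAAA")
a.write(2, b"xx")     # overlaps b's earlier write; the later write wins
b.write(4, b"BBBB")

flushed = replay([a, b], 12)
print(flushed)
```

Neither writer ever waits on the other; the overlapping write at offset 2 is only sorted out at replay time.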

Unfortunately, log-structured writes make reading very difficult, since the read can no longer seek directly to a file offset to find the data it needs. Instead, the log needs to be replayed to some degree, effectively forcing a flush to occur. Furthermore, if the logs are spread out across different logical flash domains (as would happen in CN-attached flash architectures), read-back may require the logs to be centrally collected before the replay can happen, or it may require inter-node communication to coordinate who owns the different bytes that the application needs to read.

What this amounts to is functionality that may present itself like a private parallel file system burst buffer, but behaves very differently on reads and writes. For example, attempting to read the data that exists in a log that doesn't belong to the writer might generate an I/O error, so applications (or I/O middleware) probably need to have very well-behaved I/O to get the full performance benefits of this mode. Most extreme-scale HPC applications already do this, so log-structured/journaling mode is a very attractive approach for very large applications that rely on extreme write performance to checkpoint their progress.
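
The read-side cost can also be sketched in Python. The hypothetical function below serves a read from a single writer's log, where records are (timestamp, file offset, data) tuples in append order. It has to scan every record because the log is not indexed by file offset, and it raises an error when the requested bytes are not in this log. Both the record layout and the error behavior are assumptions made for illustration.

```python
def read_from_log(records, offset: int, length: int) -> bytes:
    """Serve a read by scanning one writer's log from start to finish."""
    buf = bytearray(length)
    found = [False] * length
    for _, rec_off, data in records:      # no seeking: every record is visited
        start = max(offset, rec_off)
        end = min(offset + length, rec_off + len(data))
        for pos in range(start, end):     # empty if the record doesn't overlap
            buf[pos - offset] = data[pos - rec_off]
            found[pos - offset] = True    # later records overwrite earlier ones
    if not all(found):
        raise OSError("requested bytes are not in this writer's log")
    return bytes(buf)


log = [(0, 100, b"abcdef"), (1, 0, b"0123"), (2, 102, b"XY")]

result = read_from_log(log, 101, 4)       # bytes 101..104 of the file
print(result)

try:
    read_from_log(log, 0, 8)              # bytes 4..7 were never written here
    missing_ok = True
except OSError:
    missing_ok = False
print(missing_ok)
```

An application that only reads back what each process wrote itself never hits the error path, which is what "well-behaved I/O" means in this context.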

Log-structured/journaling mode is well suited for CN-attached flash since logs do not need to live on a file system that presents a single shared namespace across all compute nodes. In practice, the IBM CORAL systems will probably provide log-structured/journaling mode through IBM's burst buffer software. Oak Ridge National Laboratory has also demonstrated a log-structured burst buffer system called BurstMem on a fabric-attached flash architecture. Intel's CPPR library, to be deployed with the Argonne Aurora system, may also implement this functionality atop the 3D XPoint to be embedded in each compute node.

<h3>Other Modes</h3>

The above three modes are not the only ones that burst buffers may implement, and some burst buffers support more than one of the above modes. For example, Cray's DataWarp, in addition to supporting private PFS and transparent caching modes, also has a swap mode that allows compute nodes to use the flash as swap space to prevent hard failures for data analysis applications that consume non-deterministic amounts of memory. In addition, Intel's CPPR library is targeting byte-addressable nonvolatile memory which would expose a load/store interface, rather than the typical POSIX open/write/read/close interface, to applications.

<h2>Outlook</h2>

</div>